Multi-environment Cloud Infrastructure with Terraform & Azure DevOps

Author: Rollend Xavier

Published: July 13, 2022

On a recent project we used Terraform to provision the Azure infrastructure. In this post I will explain the process and how we progressed towards creating a ‘Multi-environment Cloud Infrastructure’.

Multi-environment

Working on large projects requires several environments, such as DEV, SIT, UAT, PROD, etc., and it is always good to keep non-prod and prod as similar to each other as possible. This helps us identify failures early and reduces the blast radius. A single pipeline that manages these environments, with dependencies between them, is a good way to achieve that.

Terraform

Terraform is rapidly becoming the de facto standard for creating and managing cloud infrastructure with declarative code. It offers several ways of managing multiple environments, keeping code reusable, managing resource state, etc.

Monolithic vs Modular Terraform Configurations

Monolithic story

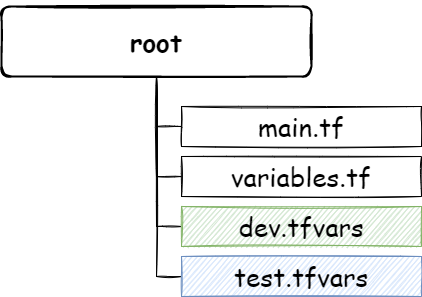

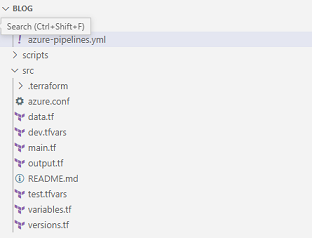

We started with a single main.tf file in the root directory that created all the resources, managed with a single state file. That worked well, as we were concentrating only on getting things working: creating the workspace, pipelines, variables, Azure authentication, etc. All went well, and our development and test environments were up and running, automated through Azure Pipelines.

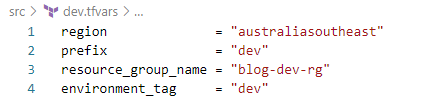

If you look at the source above, you can see two files, dev.tfvars and test.tfvars, which provide the separation between the environments. Simply put, you supply dev.tfvars to provision your development environment and test.tfvars for the test environment. They populate the variables declared in variables.tf with environment-specific values like resource groups, environment tags, prefix, etc.
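As a minimal sketch of this split (the variable names and values below are illustrative assumptions, not taken from the project), variables.tf declares the inputs and each .tfvars file assigns the environment-specific values:

```hcl
# variables.tf - declares the inputs (variable names are hypothetical)
variable "resource_group_name" {
  type        = string
  description = "Resource group that holds this environment's resources"
}

variable "environment" {
  type        = string
  description = "Environment tag, e.g. dev or test"
}

variable "prefix" {
  type        = string
  description = "Prefix applied to resource names"
}
```

```hcl
# dev.tfvars - values for the development environment (hypothetical values)
resource_group_name = "rg-demo-dev"
environment         = "dev"
prefix              = "demodev"
```

A test.tfvars file would assign the same three variables with the test environment's values.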

Your terraform plan and apply commands for the development environment will look like the ones below, and you have to configure the pipeline to pass the environment-specific .tfvars file.

terraform plan -var-file="./dev.tfvars"

terraform apply -var-file="./dev.tfvars"

Pros

- You can just copy-paste the .tfvars file to create any number of environments; say, for UAT you just need to create a uat.tfvars file.

Cons

- Complex to handle if the resources differ between environments; for example, you may create only a single app service plan in development but need several in production.
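To illustrate where this complexity comes from: with one shared main.tf, every difference between environments has to be parameterised. The sketch below is an assumption about how that might look, not the project's code; it uses a hypothetical `app_service_plan_count` variable and the `azurerm_service_plan` resource (available in version 3 and later of the azurerm provider), with placeholder SKU and OS values.

```hcl
# Hypothetical variable: 1 in dev.tfvars, a larger number in prod.tfvars
variable "app_service_plan_count" {
  type    = number
  default = 1
}

variable "prefix" { type = string }
variable "location" { type = string }
variable "resource_group_name" { type = string }

# One shared resource block has to cover every environment's shape
resource "azurerm_service_plan" "plan" {
  count               = var.app_service_plan_count
  name                = "${var.prefix}-plan-${count.index}"
  resource_group_name = var.resource_group_name
  location            = var.location
  os_type             = "Linux" # placeholder
  sku_name            = "B1"    # placeholder
}
```

Each additional difference needs another variable or conditional of this kind, which is what makes the monolithic file hard to follow over time.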

Note: You will have to keep a separate remote state per environment, and the pipelines can point Terraform at the right one.
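One way to do this, sketched here under assumptions (the storage names are hypothetical and the article does not show the actual setup), is a partial azurerm backend configuration where the state file key is supplied at init time:

```hcl
terraform {
  backend "azurerm" {
    resource_group_name  = "rg-tfstate"    # hypothetical
    storage_account_name = "sttfstatedemo" # hypothetical
    container_name       = "tfstate"       # hypothetical
    # "key" is left out on purpose; each pipeline stage supplies it, e.g.
    #   terraform init -backend-config="key=dev.terraform.tfstate"
    #   terraform init -backend-config="key=test.terraform.tfstate"
  }
}
```

With this arrangement the same configuration is reused, while each environment reads and writes its own state blob.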

Modular Terraform Configurations

So, that was our monolithic story. We always intended to restructure the Terraform configuration to achieve a ‘Multi-environment Cloud Infrastructure’, because a single main.tf can become hard to manage as you add more environments. There are various ways to split it up, and a few of them are:

-

Workspaces - Terraform CLI way to toggle between multiple environments. We didn’t choose this method, as there were a few risks including, the chances of accidentally performing operations in the wrong environments, cannot handle if environments are not like each other, etc.

-

Terragrunt Terragrunt is a popular terraform wrapper library that solves some of these issues, keeping IaC DRY and we kept it as our secondary option for this project.

-

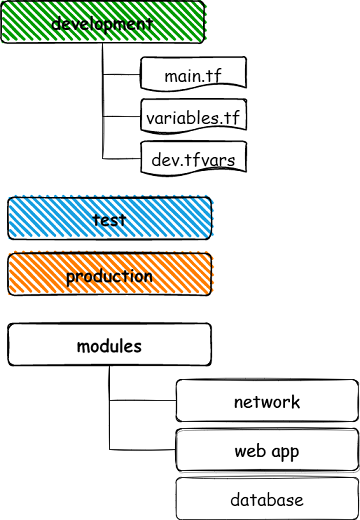

Modules & Directories - We choose this method, creating separate directories for each environment, with re-usable modules, which gave us a lot of confidence in maintaining flexibility between the environments.

Modules & Directories

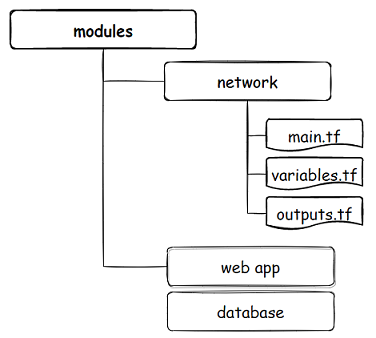

Modules are Terraform's way to reuse code and keep it DRY, helping you create multi-environment infrastructure where the code is cleaner, more readable, and isolated. A module is a directory of “.tf” configuration files; a module block in the calling configuration sources that directory and passes variables to it.

We combined the concept of directories and modules to create our multi-environment cloud infrastructure where ‘each environment is isolated by directory’ and the environments can access the modules to create the required infrastructure.

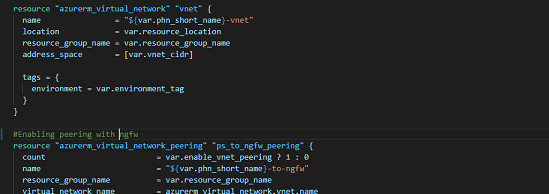

The image below shows how main.tf looks inside the network module folder.

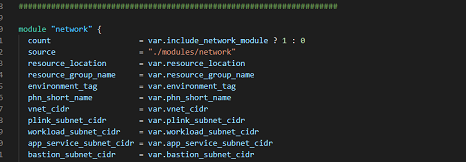
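For readers without access to the image, here is a rough sketch of what a network module's main.tf might contain. It is not the project's actual code: the path, variable names, resource names, and address ranges are all assumptions chosen for illustration.

```hcl
# modules/network/main.tf (hypothetical path and names)
variable "prefix" { type = string }
variable "location" { type = string }
variable "resource_group_name" { type = string }

variable "address_space" {
  type    = list(string)
  default = ["10.0.0.0/16"]
}

variable "subnet_prefixes" {
  type    = list(string)
  default = ["10.0.1.0/24"]
}

# Virtual network named after the environment prefix
resource "azurerm_virtual_network" "vnet" {
  name                = "${var.prefix}-vnet"
  location            = var.location
  resource_group_name = var.resource_group_name
  address_space       = var.address_space
}

# A single subnet inside that virtual network
resource "azurerm_subnet" "subnet" {
  name                 = "${var.prefix}-subnet"
  resource_group_name  = var.resource_group_name
  virtual_network_name = azurerm_virtual_network.vnet.name
  address_prefixes     = var.subnet_prefixes
}

# Exposed so that calling configurations can attach resources to the subnet
output "subnet_id" {
  value = azurerm_subnet.subnet.id
}
```

The module contains no environment-specific values; everything that varies arrives through its input variables.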

Inside your development folder, you can see a main.tf file, where you call the required modules and pass them the variables they need.
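A minimal sketch of such an environment-level main.tf follows. The directory layout and the module inputs (`prefix`, `location`, `resource_group_name`) are assumptions for illustration; it presumes a network module at `../../modules/network` that accepts those three inputs.

```hcl
# environments/dev/main.tf (hypothetical layout)
terraform {
  required_providers {
    azurerm = {
      source = "hashicorp/azurerm"
    }
  }
}

provider "azurerm" {
  features {}
}

# Values for these come from the environment's .tfvars file
variable "prefix" { type = string }
variable "location" { type = string }
variable "resource_group_name" { type = string }

resource "azurerm_resource_group" "rg" {
  name     = var.resource_group_name
  location = var.location
}

# Call the shared module and pass this environment's values to it
module "network" {
  source              = "../../modules/network"
  prefix              = var.prefix
  location            = azurerm_resource_group.rg.location
  resource_group_name = azurerm_resource_group.rg.name
}
```

An environment that needs extra resources can add more module blocks here without touching the other environments' directories.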

We kept a .tfvars file per environment. The advantage was that it looked cleaner than hardcoding resource names in the main files, and it made the environments easy to replicate (just copy-paste the main.tf file). The major advantage was that if you have two or more similar environments, you can keep a single main.tf with environment-specific .tfvars files and configure your pipeline to pass those variables to main.tf.

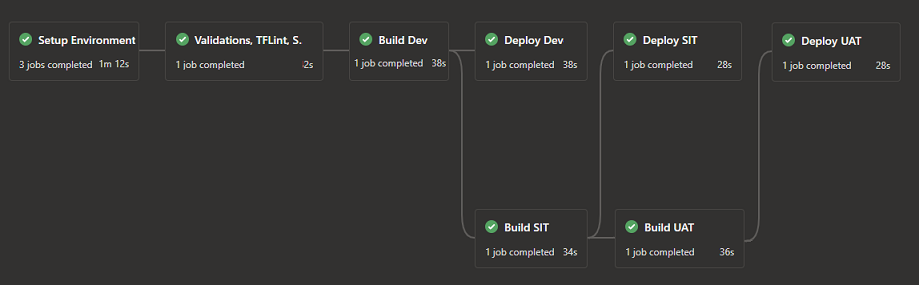

Pipelines

All of the above explains how Terraform is structured to handle multiple environments. Next comes the pipeline, which plays the major role in handling builds and deployments into these environments.

We used Azure DevOps YAML pipelines to deploy the resources, and the basic steps required in a Terraform YAML CI/CD pipeline are below.

- Init - terraform init, validate, lint, tfsec, etc.
- Build - terraform plan
- Deploy - terraform apply

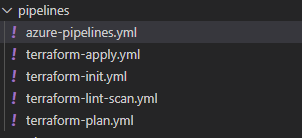

We split our YAML files to make them more readable and reusable, and combined them using Azure DevOps YAML templates.

The sample below shows a Terraform plan & apply pipeline deploying into multiple environments. You can configure stage dependencies, approvals, triggers, etc. inside the YAML scripts, based on your requirements.