Create a Fine-tuned GPT-3.5 Model using Azure OpenAI Services

Author: Daniel Fang

Published: April 26, 2024

15 minutes to read

Fine-tuning GPT-3.5 on Azure OpenAI Services can enhance the performance of the base model for your specific use case, whether it’s customer support, content generation, code completion, or other applications. This guide walks you through the steps to fine-tune a GPT-3.5 model using Azure OpenAI Services, from setting up your Azure environment to deploying and using the fine-tuned model.

Prerequisites

Before you begin, ensure you have the following:

- Azure Account: An active Azure subscription.

- Azure OpenAI Access: Access to Azure OpenAI Services (you may need to request access through the Azure request form using a business email).

- Data for Fine-Tuning: A curated dataset in JSONL format for fine-tuning.

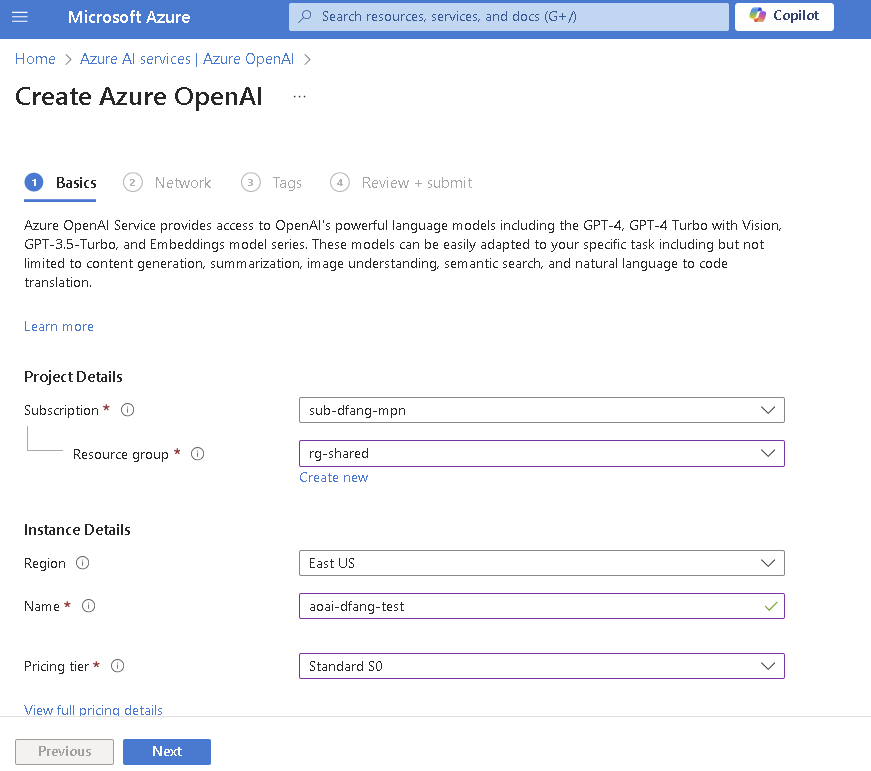

Step 1: Set Up Azure OpenAI Services

1.1 Create an Azure OpenAI Resource

Log in to the Azure Portal: Go to https://portal.azure.com and log in to your Azure account.

Create a New Resource: Search for “Azure OpenAI” in the Azure Marketplace and click “Create”.

Configure the Resource:

- Subscription: Choose your Azure subscription.

- Resource Group: Create a new resource group or select an existing one.

- Region: Select a region where Azure OpenAI is available. (The East US region tends to offer more models than most other regions.)

- Resource Name: Give your OpenAI resource a unique name.

Review and Create: Review the configurations and click on “Create” to provision the resource.

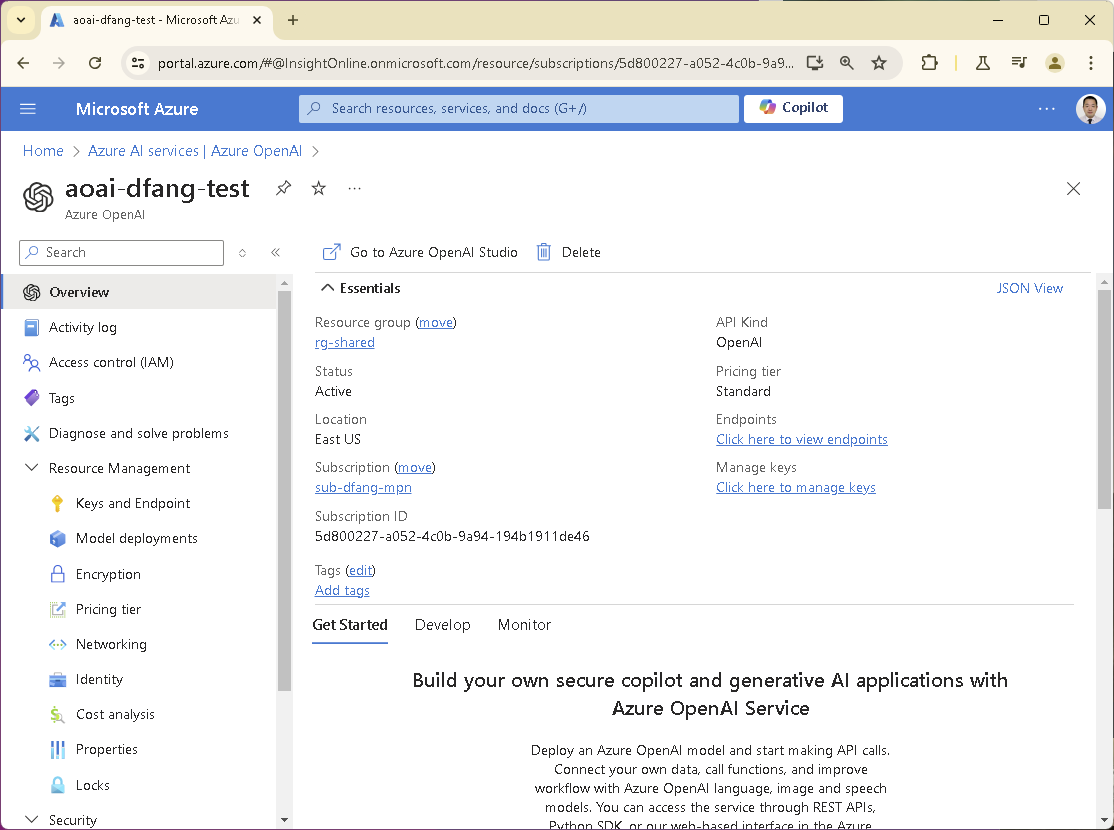

Once the resource is created, you can access the Azure OpenAI service in the Azure Portal.

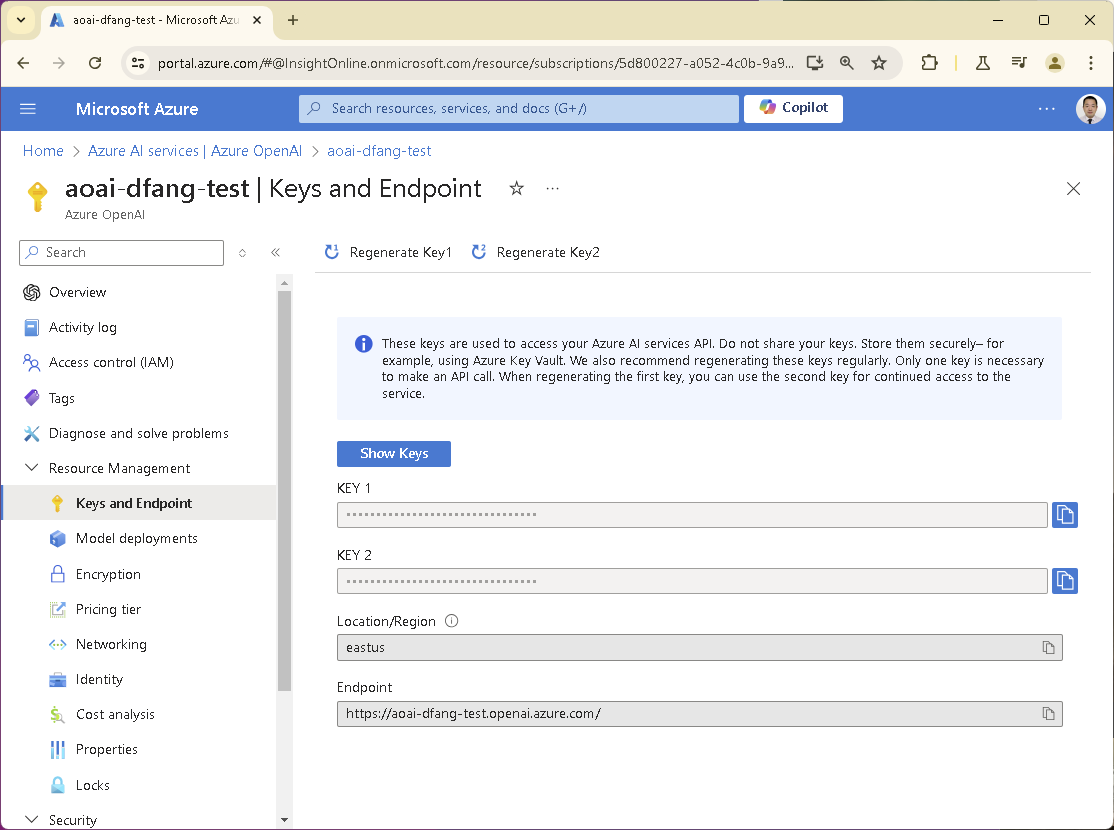

1.2 Obtain API Access

To see your Azure OpenAI Service details (such as the API endpoint and API key), navigate to the Keys and Endpoint tab of the resource. You will use these values to interact with Azure OpenAI Services programmatically.
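One way to keep these values out of source code is to read them from environment variables. The sketch below is only an illustration: the variable names AZURE_OPENAI_ENDPOINT and AZURE_OPENAI_API_KEY and the helper load_config are assumptions made for this example, not names required by Azure.

```python
import os
from typing import Mapping, NamedTuple


class AzureOpenAIConfig(NamedTuple):
    endpoint: str  # e.g. https://<your-aoai-name>.openai.azure.com
    api_key: str


def load_config(env: Mapping[str, str] = os.environ) -> AzureOpenAIConfig:
    """Read the endpoint and key copied from the Keys and Endpoint tab.

    The environment variable names are assumptions made for this sketch.
    """
    required = ("AZURE_OPENAI_ENDPOINT", "AZURE_OPENAI_API_KEY")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    # Strip a trailing slash so URL paths can be appended safely later.
    return AzureOpenAIConfig(
        endpoint=env["AZURE_OPENAI_ENDPOINT"].rstrip("/"),
        api_key=env["AZURE_OPENAI_API_KEY"],
    )
```

Failing early with a clear message is usually easier to debug than a rejected API call later on.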

Step 2: Prepare Your Fine-Tuning Data

Fine-tuning GPT-3.5 involves providing the model with a custom dataset that contains specific examples of the task you want it to perform. A well-prepared dataset can significantly improve the performance of the fine-tuned model.

Ensure that your dataset is representative of the real-world data the model will encounter. Avoid including biased or unbalanced data.

- Accuracy: Ensure that the completions are accurate and high-quality.

- Consistency: Maintain consistency in formatting and structure across all examples.

- Variety: Include diverse examples to cover different scenarios within your use case.

2.1 Dataset Guidelines

The dataset for fine-tuning GPT-3.5 must be in the JSON Lines (JSONL) format. Each line in the file contains a JSON object representing a single training example. Because GPT-3.5 is a chat model, each example is a conversation stored in a messages array rather than a bare prompt and completion pair:

prompt: The system and user messages. This is the input you will provide to the fine-tuned model when you want it to perform a task.

completion: The assistant message. This is the desired output that you expect the model to generate based on the prompt.

Here is a basic example of how this looks:

{"messages": [{"role": "system", "content": "....."}, {"role": "user", "content": "....."}, {"role": "assistant", "content": "....."}]}
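Before uploading, it can help to check each line of the file against this structure. The following is a minimal sketch, not an official validator: the function name validate_jsonl_line, the set of allowed roles, and the rule that every example needs at least one assistant message are assumptions made for illustration.

```python
import json

# Roles used in the examples in this guide (an assumption of this sketch).
ALLOWED_ROLES = {"system", "user", "assistant"}


def validate_jsonl_line(line: str) -> list[str]:
    """Return a list of problems found in one JSONL line (empty if none)."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]

    messages = record.get("messages") if isinstance(record, dict) else None
    if not isinstance(messages, list) or not messages:
        return ["missing a non-empty 'messages' list"]

    problems = []
    for index, message in enumerate(messages):
        if not isinstance(message, dict):
            problems.append(f"message {index} is not an object")
            continue
        if message.get("role") not in ALLOWED_ROLES:
            problems.append(f"message {index} has unexpected role {message.get('role')!r}")
        if not isinstance(message.get("content"), str):
            problems.append(f"message {index} has no string content")

    # Without an assistant turn there is no target output to learn from.
    if not any(isinstance(m, dict) and m.get("role") == "assistant" for m in messages):
        problems.append("no assistant message for the model to learn from")
    return problems
```

Running this over every line of a file catches common mistakes such as trailing commas or an example spread across several lines.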

2.2 Training Dataset

Collect examples that align with the task you want to fine-tune GPT-3.5 for. The dataset should be large enough to capture the nuances of the task but focused on the specific application to avoid overfitting.

The dataset should be saved as a .jsonl file, where each line is a complete JSON object. For instance, a file named training-set.jsonl might look like this, with one example per line and no commas or enclosing array between examples:

{"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "What's the capital of France?"}, {"role": "assistant", "content": "Paris"}, {"role": "user", "content": "Can you be more sarcastic?"}, {"role": "assistant", "content": "Paris, as if everyone doesn't know that already."}]}
{"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "Who wrote 'Romeo and Juliet'?"}, {"role": "assistant", "content": "William Shakespeare"}, {"role": "user", "content": "Can you be more sarcastic?"}, {"role": "assistant", "content": "Oh, just some guy named William Shakespeare. Ever heard of him?"}]}
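Writing the file from code avoids hand-editing mistakes such as stray commas. The sketch below assumes the examples are already held in memory as Python data; the helper names chat_example and write_jsonl are hypothetical.

```python
import json
from pathlib import Path


def chat_example(system, turns):
    """Build one training example from (user, assistant) text pairs."""
    messages = [{"role": "system", "content": system}]
    for user_text, assistant_text in turns:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    return {"messages": messages}


def write_jsonl(examples, path):
    """Write each example as one compact JSON object per line."""
    with Path(path).open("w", encoding="utf-8") as handle:
        for example in examples:
            # ensure_ascii=False keeps non-ASCII text readable in the file.
            handle.write(json.dumps(example, ensure_ascii=False) + "\n")
```

For instance, write_jsonl([chat_example("Marv is a factual chatbot that is also sarcastic.", [("What's the capital of France?", "Paris")])], "training-set.jsonl") would produce a one-line file in the format described above.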

2.3 Validation Dataset

The validation dataset is essential for fine-tuning because it acts as a checkpoint that guides the training process, helping to ensure that the model not only learns from the training data but also generalizes well to new, unseen data.

During fine-tuning, hyperparameters such as learning rate, batch size, and number of epochs need to be optimized to ensure the model generalizes well. The validation dataset provides an independent set of data (separate from the training set) to evaluate the model’s performance during training.

Fine-tuning a model involves training it on a specific dataset, which can lead to overfitting if the model becomes too specialized to the training data. The validation dataset helps monitor overfitting by providing a measure of how well the model performs on unseen data. If the model’s performance on the training set continues to improve while its performance on the validation set starts to degrade, this is a sign of overfitting.
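The overfitting signal described above can be expressed as a simple check over recorded loss values. This is a rough sketch under stated assumptions: the per-step training and validation losses are assumed to be available as two lists, and the function name and the patience parameter are invented for this example.

```python
def first_overfitting_step(train_losses, valid_losses, patience=2):
    """Return the first step index of a run of `patience` consecutive steps
    where training loss falls while validation loss rises, or None."""
    streak = 0
    for step in range(1, min(len(train_losses), len(valid_losses))):
        train_improving = train_losses[step] < train_losses[step - 1]
        valid_degrading = valid_losses[step] > valid_losses[step - 1]
        streak = streak + 1 if (train_improving and valid_degrading) else 0
        if streak >= patience:
            # Report where the diverging run began, not where it was confirmed.
            return step - patience + 1
    return None
```

Requiring several consecutive diverging steps keeps a single noisy measurement from being mistaken for overfitting.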

For example, a file named validation-set.jsonl might look like this, again with one JSON object per line and no commas or enclosing array between examples:

{"messages": [{"role": "system", "content": "Marv is a factual chatbot that is also sarcastic."}, {"role": "user", "content": "How far is the Moon from Earth?"}, {"role": "assistant", "content": "384,400 kilometres"}, {"role": "user", "content": "Can you be more sarcastic?"}, {"role": "assistant", "content": "Around 384,400 kilometres. Give or take a few, like that really matters."}]}
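If all your examples start out in a single collection, one common approach is to hold out a random portion for validation so the two sets never overlap. The sketch below assumes a 20% hold-out and a fixed seed; both values, and the function name split_examples, are illustrative choices rather than Azure requirements.

```python
import random


def split_examples(examples, validation_fraction=0.2, seed=42):
    """Shuffle examples and return (training, validation) lists."""
    if not 0 < validation_fraction < 1:
        raise ValueError("validation_fraction must be between 0 and 1")
    shuffled = list(examples)
    if len(shuffled) < 2:
        raise ValueError("need at least two examples to split")
    # A seeded generator makes the split reproducible between runs.
    random.Random(seed).shuffle(shuffled)
    # Keep at least one example on each side of the split.
    n_valid = min(max(1, round(len(shuffled) * validation_fraction)), len(shuffled) - 1)
    return shuffled[n_valid:], shuffled[:n_valid]
```

Each returned list can then be written to its own .jsonl file.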

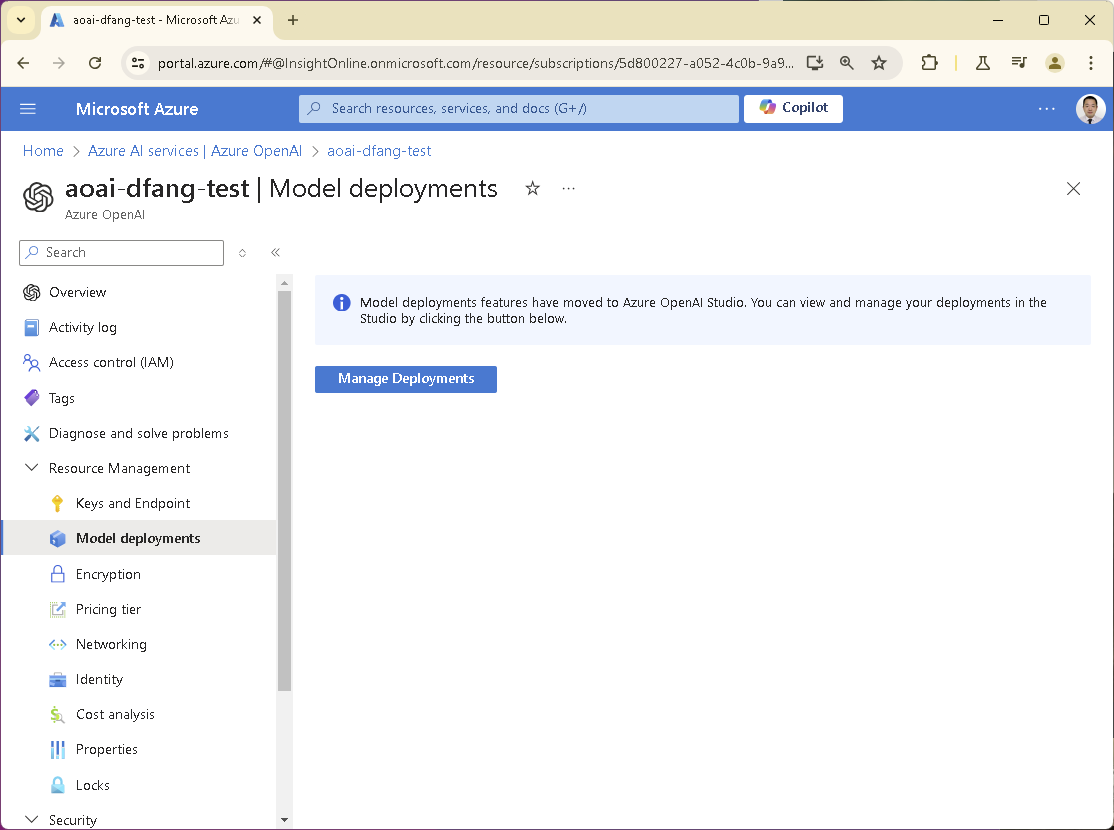

Step 3: Fine-Tune the GPT-3.5 Model

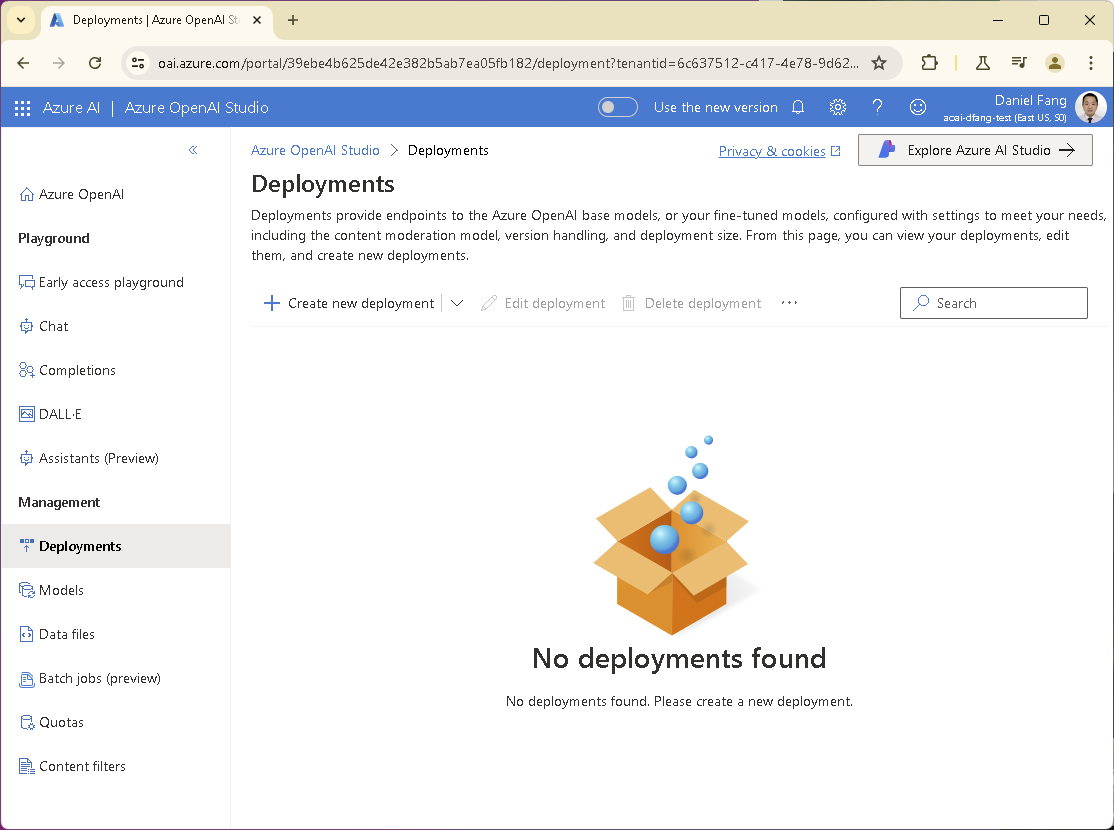

Let’s go through the steps to fine-tune a model in Azure. Go to the Azure Portal and open your Azure OpenAI resource. Under Resource Management -> Model deployments, click the Manage Deployments button.

Wait for the Azure OpenAI Studio to load.

3.1 Upload Training and Validation Dataset

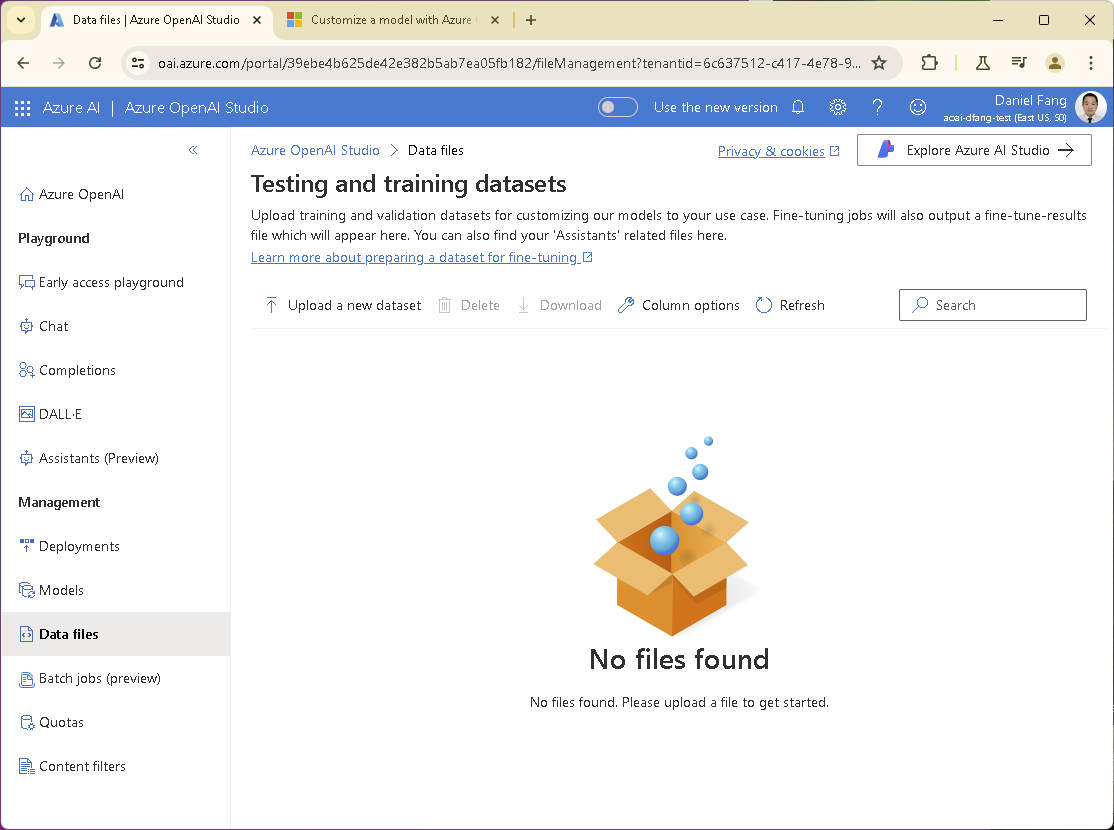

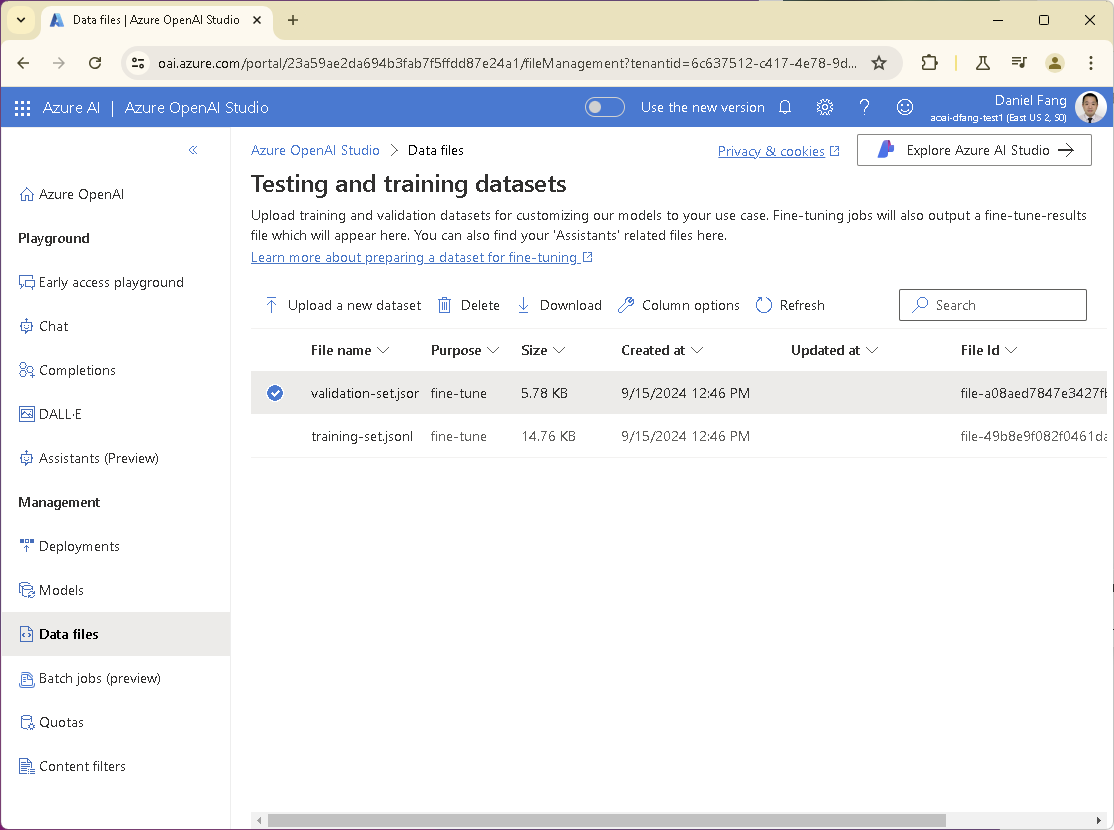

Now, let’s upload the data files. Go to the Management -> Data Files tab on the left menu bar and upload the two data files: training-set.jsonl and validation-set.jsonl.

Upload the training file first, then do the same for the validation file.
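The same upload can also be scripted instead of using the Studio. The sketch below assumes the openai Python package (version 1.x), whose AzureOpenAI client provides files.create; the helper name upload_datasets is hypothetical, and the client is passed in as an argument so the function itself holds no credentials.

```python
def upload_datasets(client, training_path, validation_path):
    """Upload both JSONL files and return their file IDs.

    `client` is assumed to be an openai.AzureOpenAI instance created with
    your endpoint, API key, and API version.
    """
    file_ids = {}
    for label, path in (("training", training_path), ("validation", validation_path)):
        with open(path, "rb") as handle:
            # purpose="fine-tune" marks the file as fine-tuning data.
            uploaded = client.files.create(file=handle, purpose="fine-tune")
        file_ids[label] = uploaded.id
    return file_ids
```

The returned IDs identify the uploaded files when a fine-tuning job is created programmatically.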

3.2 Submit Fine-Tuning Job

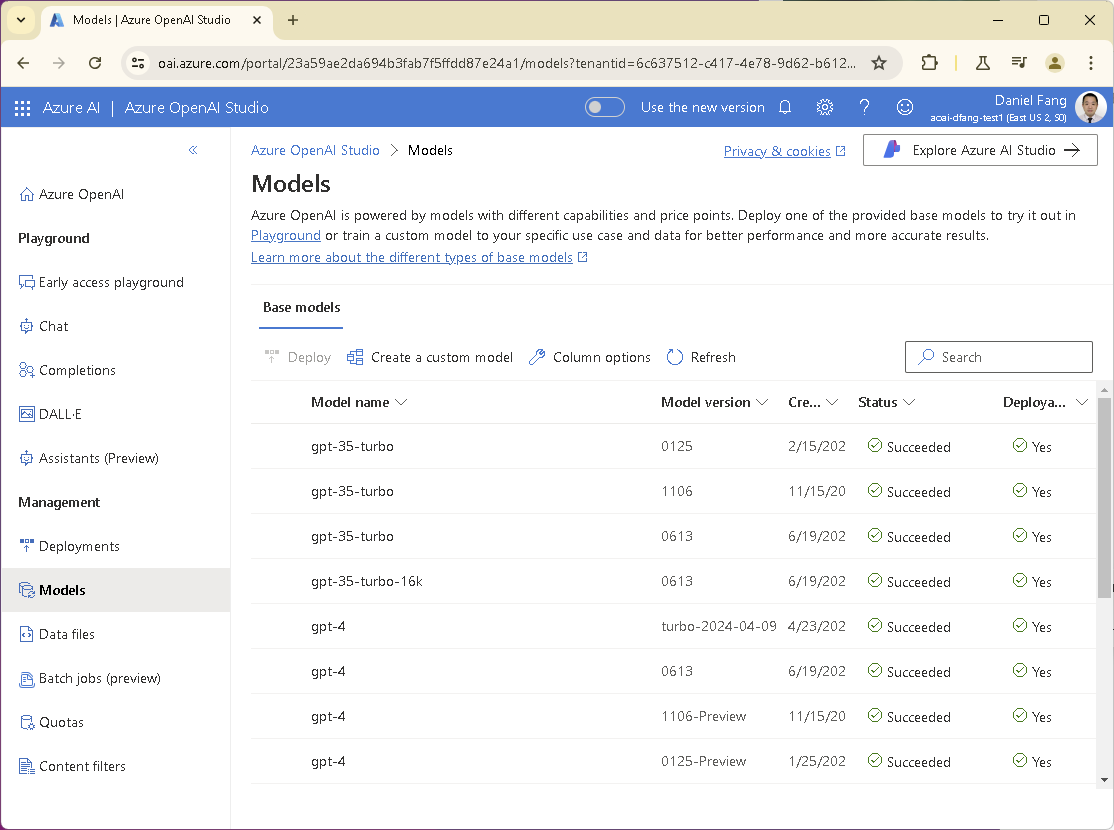

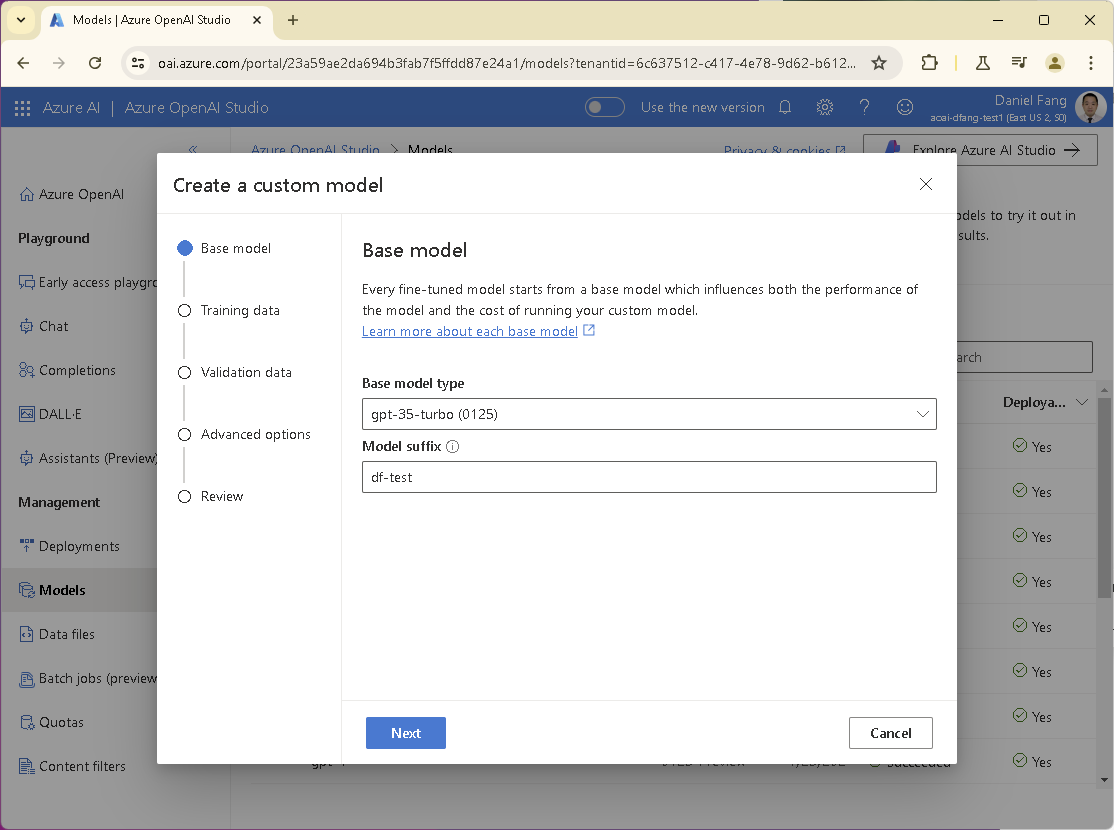

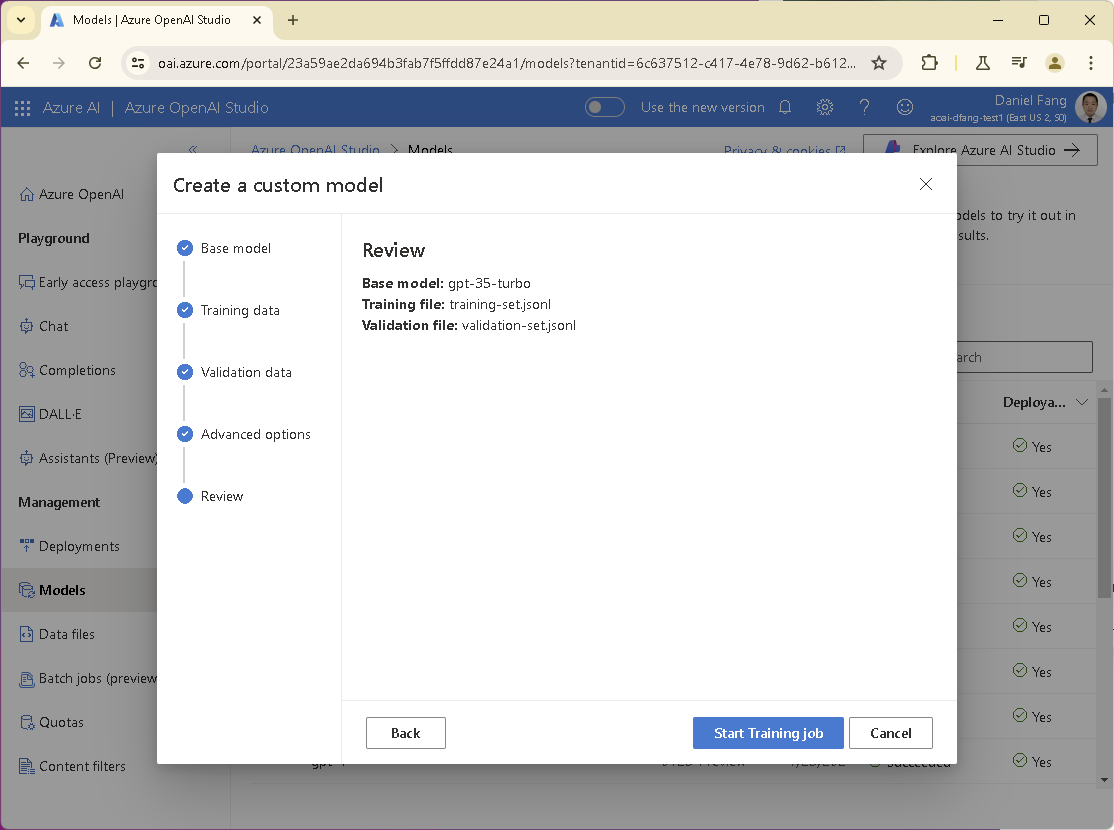

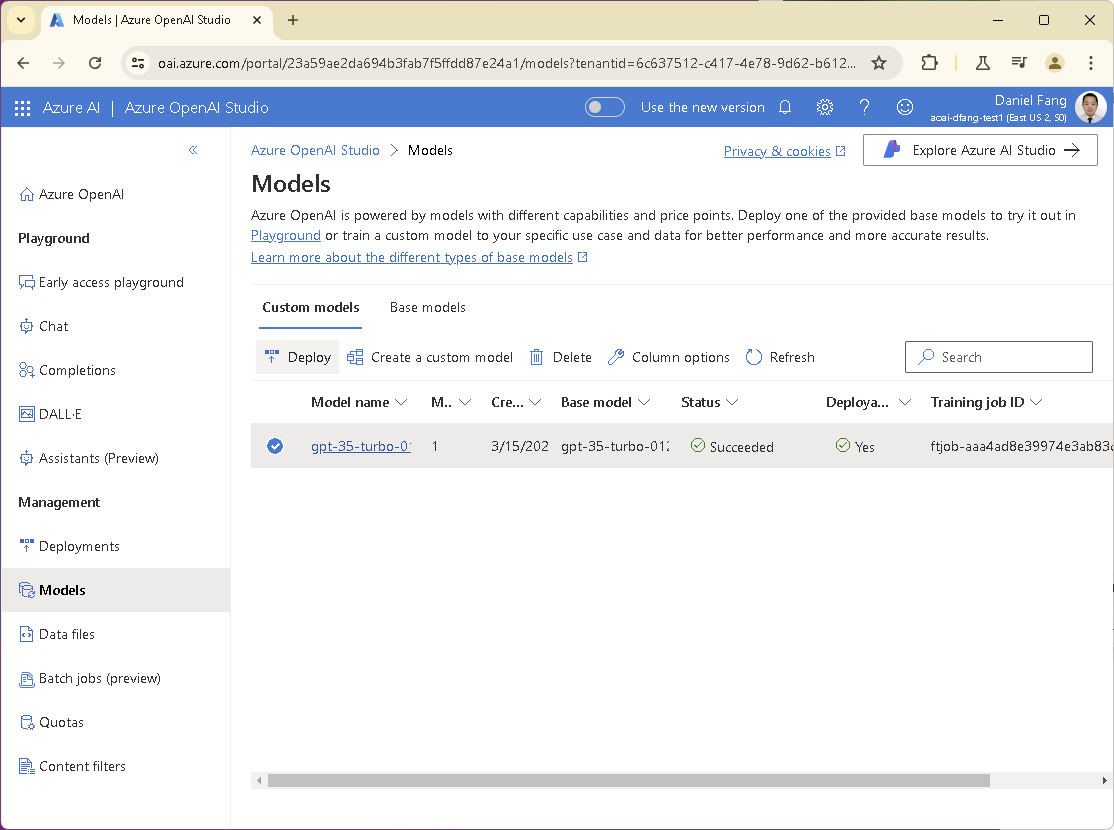

We can now submit a fine-tuning job using the uploaded files. Go to the Models tab and click Create a custom model.

Select the base model you would like to refine. Be aware that not all models on the Azure OpenAI service can be fine-tuned.

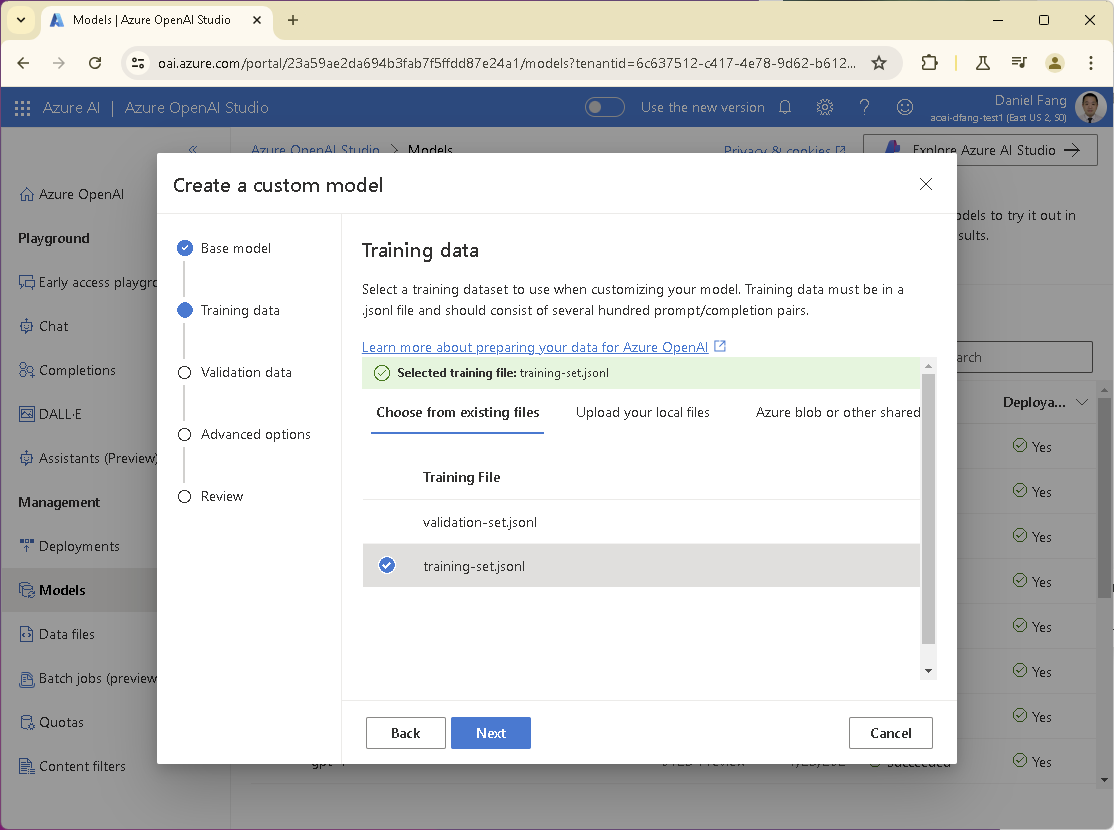

Select the training data file.

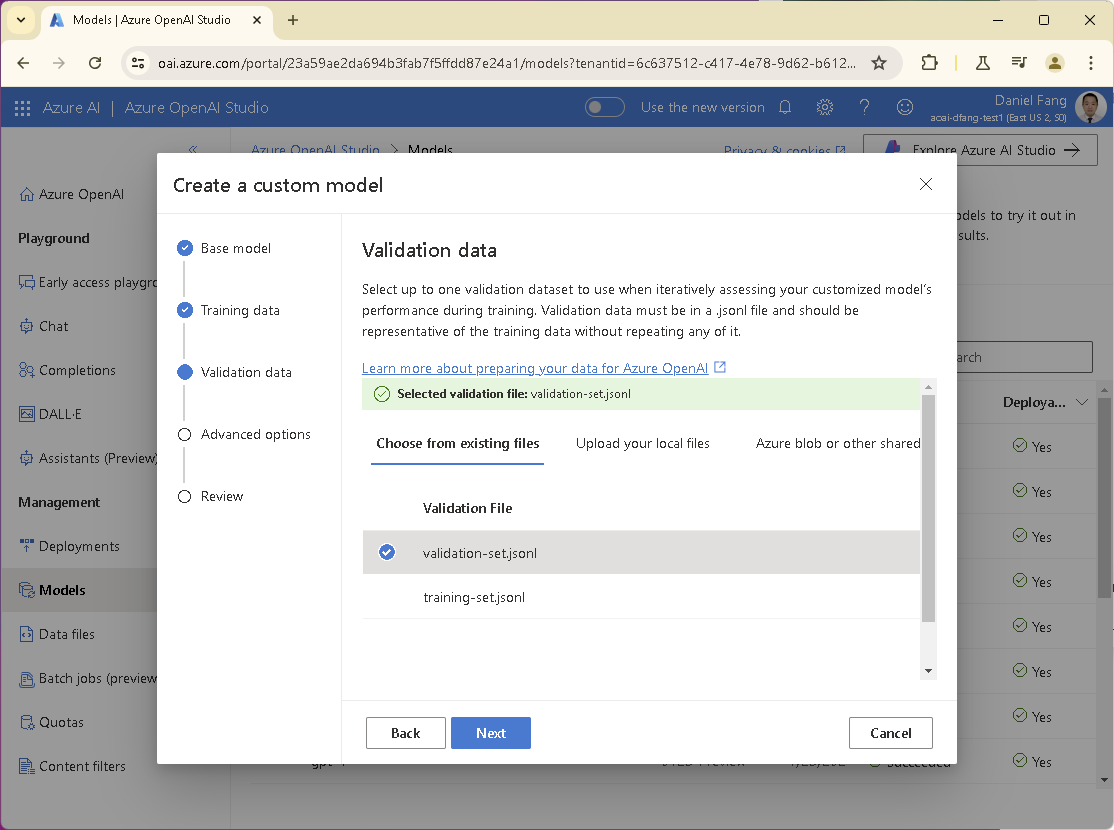

Select the validation data file.

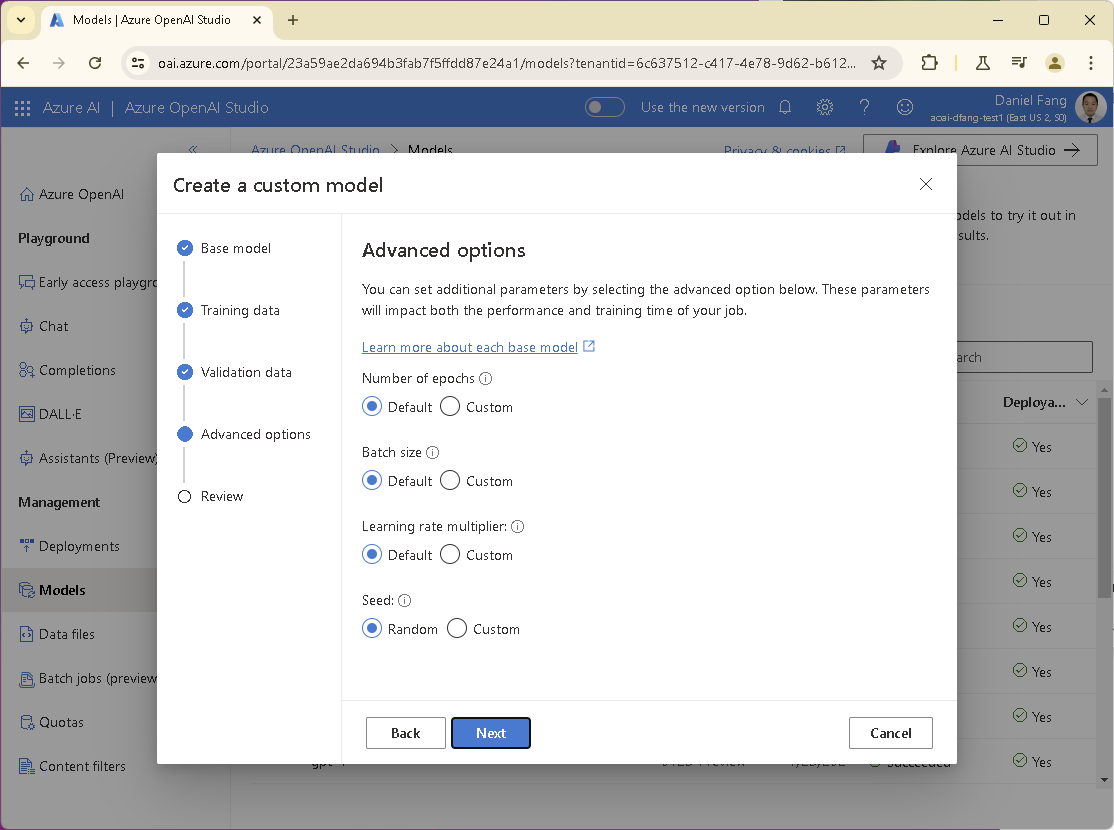

Review the settings under Advanced options.

Finally, review and submit the fine-tuning job.
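For reference, the wizard steps above correspond roughly to a single SDK call. The sketch assumes the openai Python package (version 1.x) and its fine_tuning.jobs.create method; the helper name submit_fine_tuning_job is hypothetical, and the optional n_epochs argument stands in for the kind of value found under Advanced options.

```python
def submit_fine_tuning_job(client, base_model, training_file_id,
                           validation_file_id, n_epochs=None):
    """Create a fine-tuning job and return its job ID.

    `client` is assumed to be an openai.AzureOpenAI instance, and
    `base_model` a fine-tunable base model name in your region.
    """
    request = {
        "model": base_model,
        "training_file": training_file_id,
        "validation_file": validation_file_id,
    }
    if n_epochs is not None:
        # Leave hyperparameters out entirely to accept the service defaults.
        request["hyperparameters"] = {"n_epochs": n_epochs}
    job = client.fine_tuning.jobs.create(**request)
    return job.id
```

Leaving n_epochs unset mirrors accepting the default settings in the Studio.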

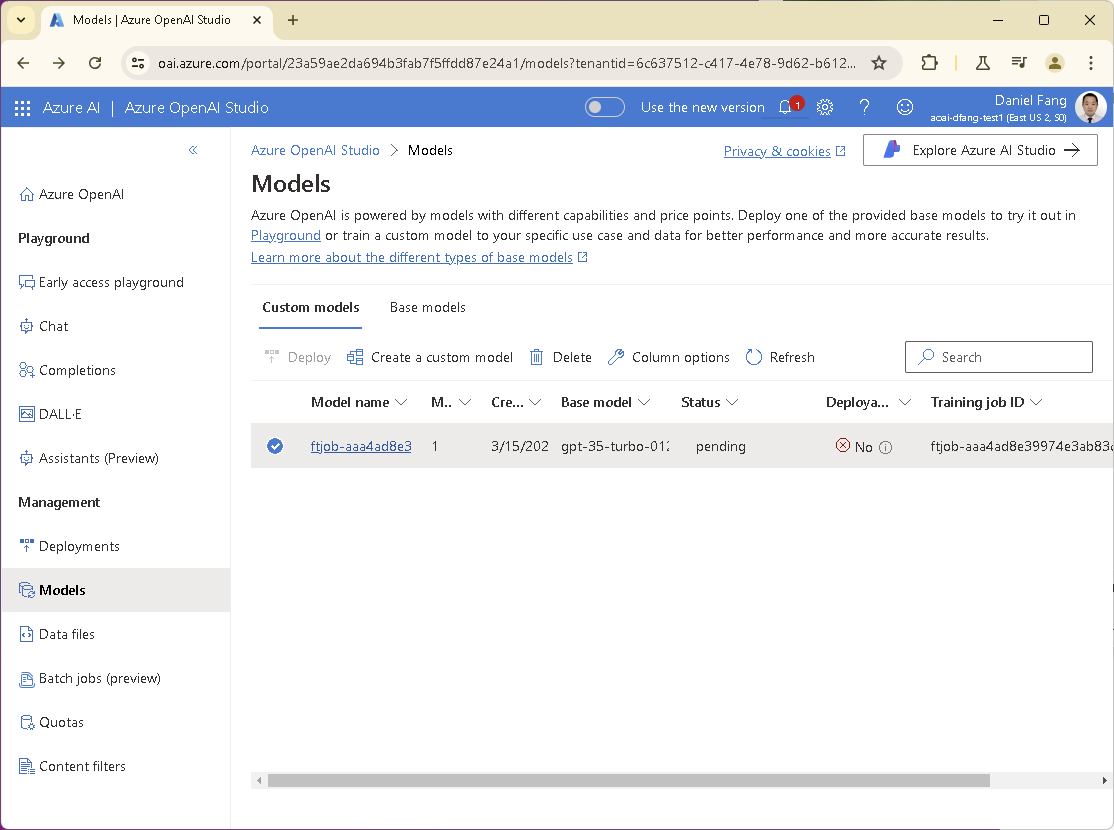

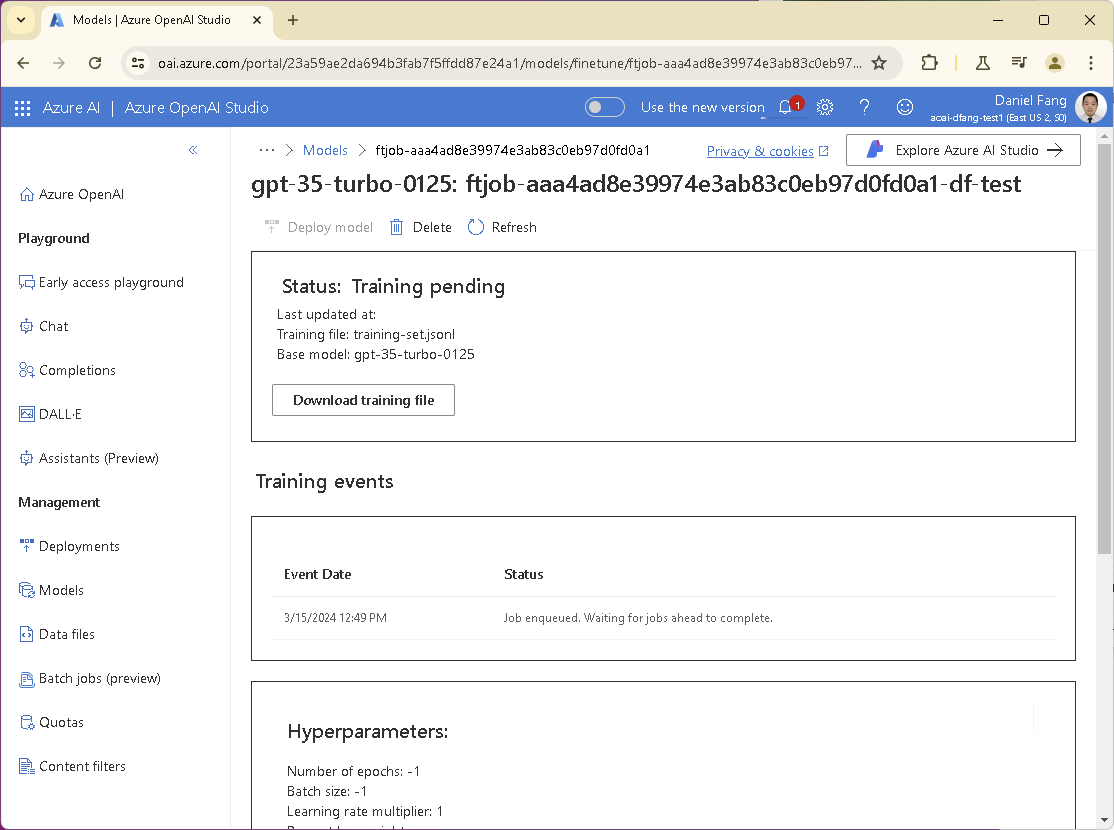

3.3 Monitor Fine-Tuning Progress

The fine-tuning process may take some time (from a few hours to days), depending on the size of your dataset and the model complexity. You can check the progress on the page shown below.

Once fine-tuning is complete, we can proceed to deploy the fine-tuned model for use.
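Since a job can run for hours, progress can also be polled from a script rather than watched in the Studio. This sketch assumes the openai Python package (version 1.x) and its fine_tuning.jobs.retrieve method; the set of terminal status strings and the helper name wait_for_job are assumptions for illustration, so check them against the statuses your jobs actually report.

```python
import time

# Statuses treated as final in this sketch (an assumption).
TERMINAL_STATUSES = {"succeeded", "failed", "cancelled"}


def wait_for_job(client, job_id, poll_seconds=60, sleep=time.sleep):
    """Poll a fine-tuning job until it reaches a terminal status.

    `client` is assumed to be an openai.AzureOpenAI instance. The `sleep`
    parameter exists so the wait can be replaced in tests.
    """
    while True:
        job = client.fine_tuning.jobs.retrieve(job_id)
        if job.status in TERMINAL_STATUSES:
            return job
        sleep(poll_seconds)
```

A generous polling interval is usually enough, given how long fine-tuning takes.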

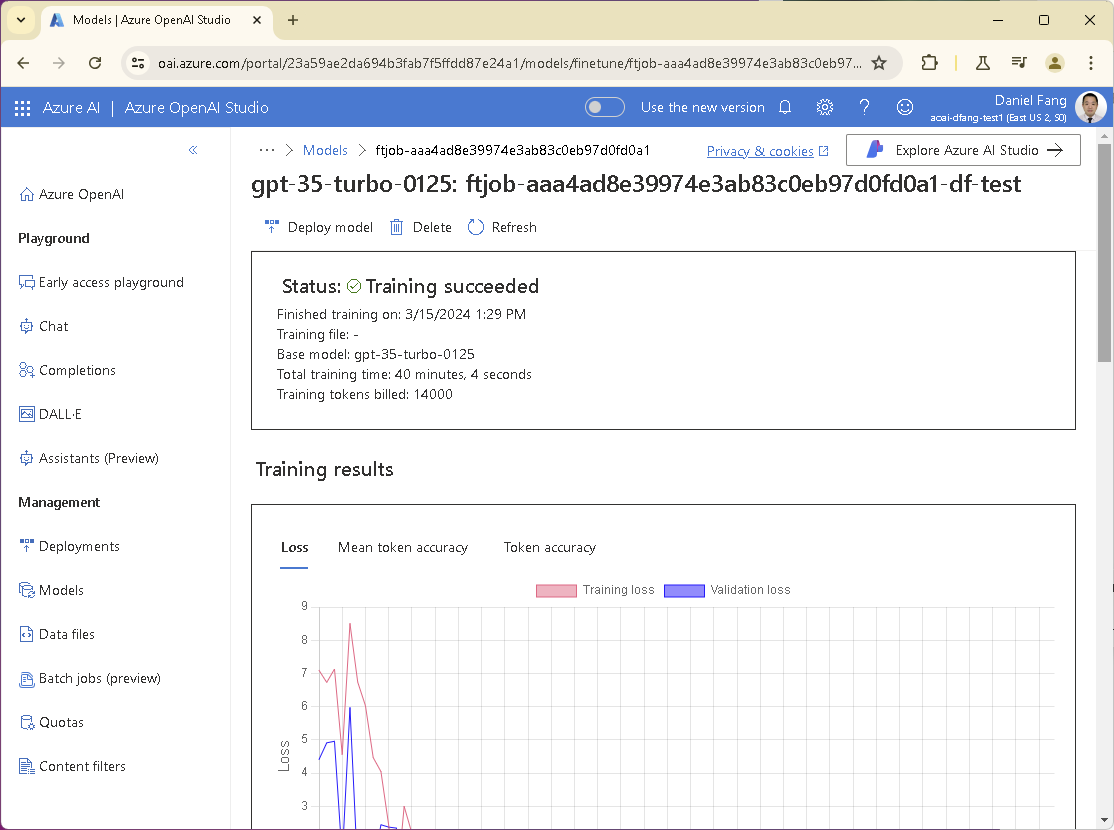

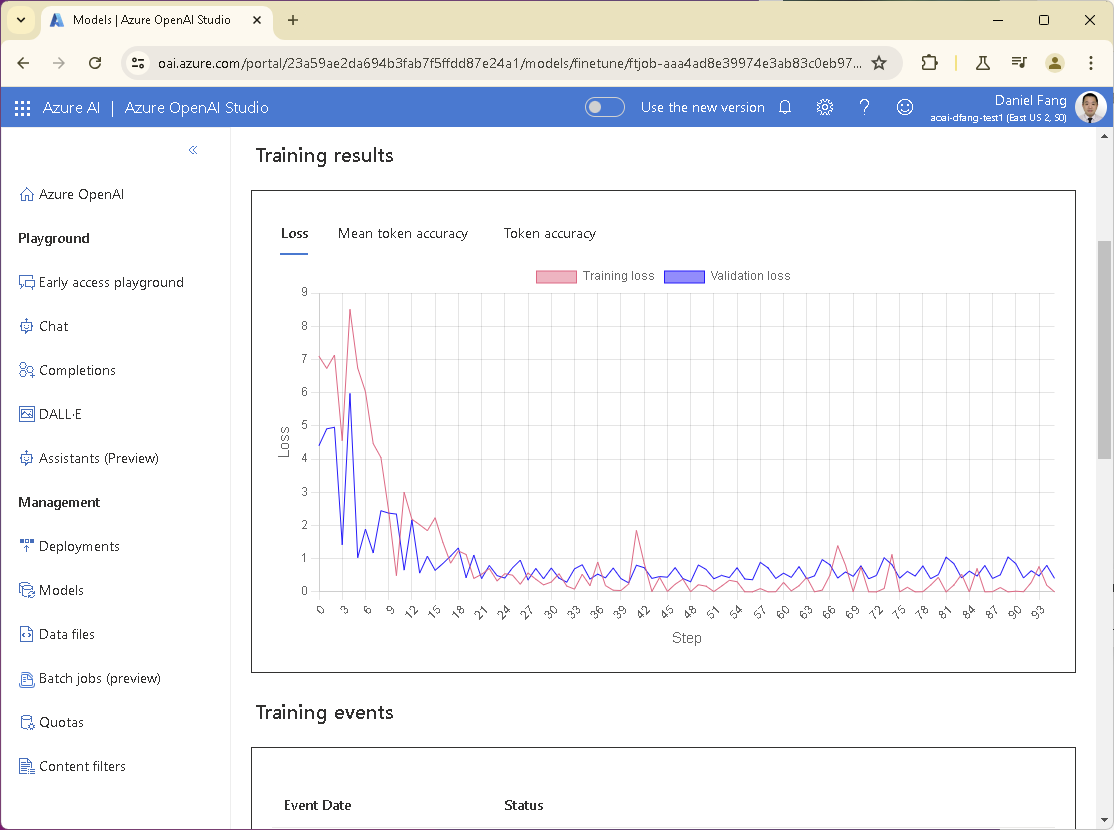

3.4 Check Fine-Tuning Result

Review the fine-tuning results below for a high-level summary.

Scroll down for more details on the fine-tuning status.

Step 4: Deploy and Use the Fine-Tuned Model

Now it’s time to deploy the fine-tuned model to an endpoint for use.

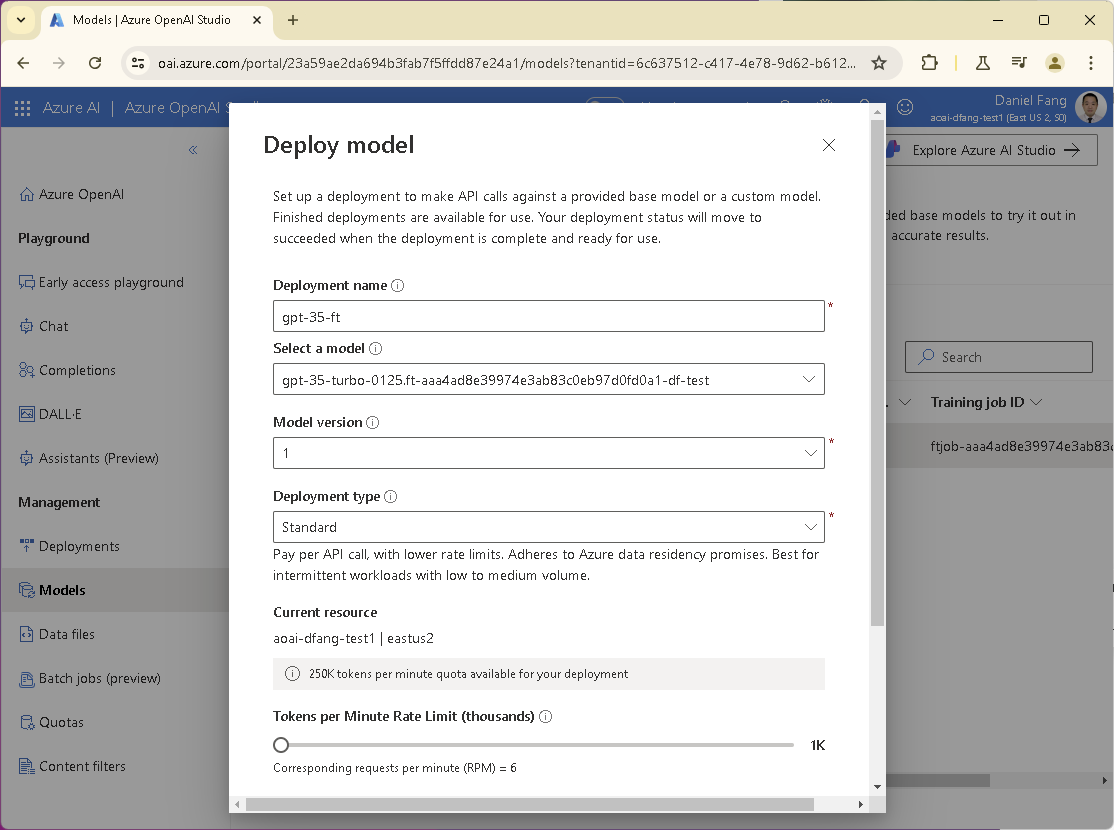

4.1 Deploy the Model

Select your fine-tuned model on the Models page and click the Deploy button.

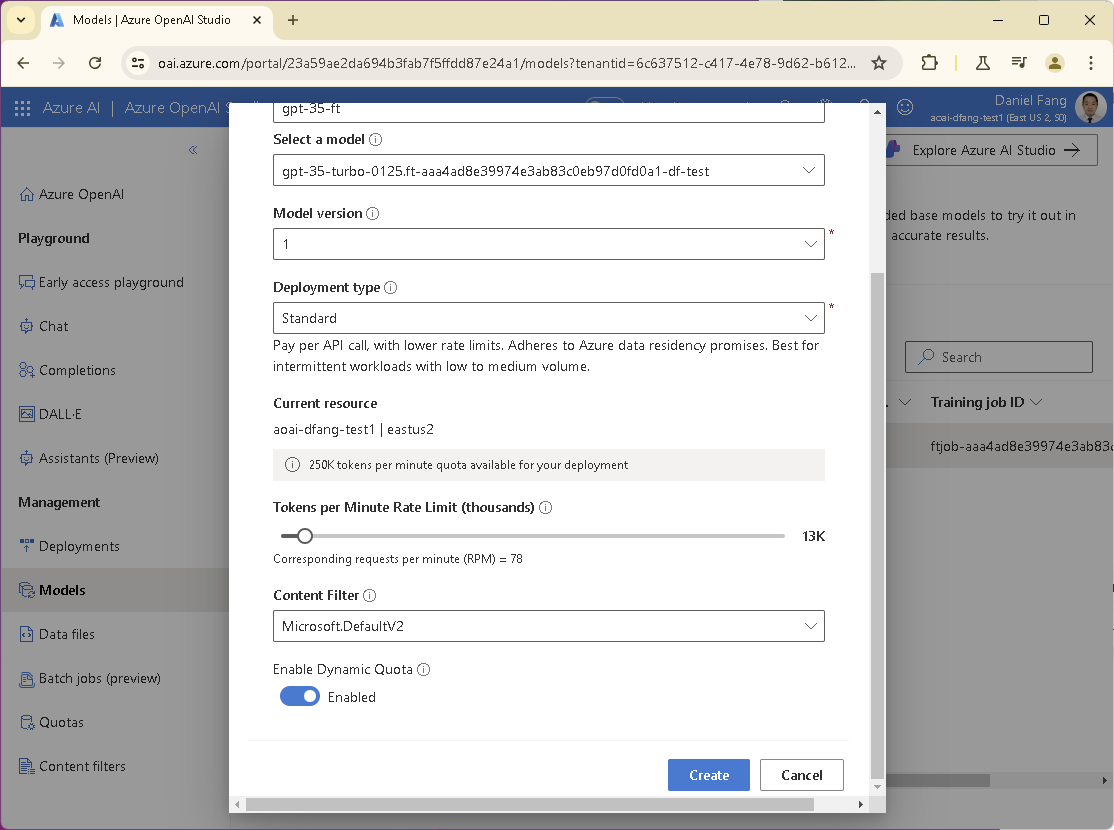

Fill in the deployment name field in the popup window and check the other attributes.

Click the Create button to submit the deployment.

The job will take a while to complete. We can check its progress in the list.

When the deployment is completed, the model is ready for use.

4.2 Use the Fine-Tuned Model

Great news! You can now start using the deployed model! 🎉 Make API calls to the fine-tuned model endpoint using the curl command or an HTTP client in your favorite programming language:

curl "https://<your-aoai-name>.openai.azure.com/openai/deployments/<your-deployment-name>/chat/completions?api-version=2024-02-15-preview" \
  -H "Content-Type: application/json" \
  -H "api-key: <your-api-key>" \
  -d "{
  \"messages\": [{\"role\":\"system\",\"content\":\"You are an AI assistant that helps people find information.\"},{\"role\":\"user\",\"content\":\"hi\"},{\"role\":\"assistant\",\"content\":\"Hello! How can I assist you today?\"}],
  \"max_tokens\": 800,
  \"temperature\": 0.7,
  \"frequency_penalty\": 0,
  \"presence_penalty\": 0,
  \"top_p\": 0.95,
  \"stop\": null
}"

Replace the placeholders as follows; an example is provided below.

- <your-aoai-name>: Your Azure OpenAI resource name.

- <your-deployment-name>: Your deployment name.

- <your-api-key>: Your Azure OpenAI API key.

Here is a test run of the HTTP call to the fine-tuned model. It looks the same as a regular Azure OpenAI REST call.

curl "https://aoai-dfang-test.openai.azure.com/openai/deployments/gpt-35-ft/chat/completions?api-version=2024-02-15-preview" \
  -H "Content-Type: application/json" \
  -H "api-key: c2d1f5c8962a418xxxxxxx" \
  -d "{
  \"messages\": [{\"role\":\"system\",\"content\":\"You are an AI assistant that helps people find information.\"},{\"role\":\"user\",\"content\":\"hi\"},{\"role\":\"assistant\",\"content\":\"Hello! How can I assist you today?\"}],
  \"max_tokens\": 800,
  \"temperature\": 0.7,
  \"frequency_penalty\": 0,
  \"presence_penalty\": 0,
  \"top_p\": 0.95,
  \"stop\": null
}"
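For readers who prefer an HTTP client in code, the same call can be built with Python's standard library. This is a minimal sketch mirroring the curl command: the helper names build_chat_request and send are hypothetical, and the URL pattern and api-version value are copied from the example above.

```python
import json
import urllib.request


def build_chat_request(resource_name, deployment_name, api_key, messages,
                       api_version="2024-02-15-preview", **params):
    """Build the POST request for a chat completion against a deployment."""
    url = (
        f"https://{resource_name}.openai.azure.com/openai/deployments/"
        f"{deployment_name}/chat/completions?api-version={api_version}"
    )
    # Extra keyword arguments (max_tokens, temperature, ...) join the body.
    body = json.dumps({"messages": messages, **params}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json", "api-key": api_key},
    )


def send(request):
    """Send the request and decode the JSON response (needs network access)."""
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Keeping request construction separate from sending makes it possible to inspect the URL and body before any network traffic happens.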

The fine-tuned model should return a JSON API response like the one below.

{
  "choices":[
    {
      "finish_reason":"stop",
      "index":0,
      "logprobs":null,
      "message":{
        "content":"Hello! How can I assist you today?",
        "role":"assistant"
      }
    }
  ],
  "created":1726374522,
  "id":"chatcmpl-A7bAIaEPo8hNqGfJOjkOm5fUQ5iy0",
  "model":"gpt-35-turbo-0125.ft-aaa4ad8e39974e3ab83c0eb97d0fd0a1-df-test",
  "object":"chat.completion",
  "system_fingerprint":"fp_e49e4201a9",
  "usage":{
    "completion_tokens":9,
    "prompt_tokens":36,
    "total_tokens":45
  }
}
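In application code, usually only a few of these fields matter. The sketch below pulls out the reply text, the finish reason, the model name, and the token count from a response shaped like the one above; the function name summarize_response is hypothetical, and the input is assumed to be the already-decoded JSON.

```python
def summarize_response(response):
    """Extract the commonly used fields from a chat completion response."""
    choice = response["choices"][0]  # the first (and here only) completion
    usage = response.get("usage", {})
    return {
        "content": choice["message"]["content"],
        "finish_reason": choice["finish_reason"],
        "model": response.get("model"),
        "total_tokens": usage.get("total_tokens"),
    }
```

Checking that the model field contains your fine-tuned model name is a quick way to confirm the request reached the intended deployment.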

Conclusion

Fine-tuning a GPT-3.5 model using Azure OpenAI Services allows you to customize the model to fit your specific use cases, improving performance and output quality. This guide provides a comprehensive overview of setting up and fine-tuning the model. Remember, fine-tuning requires a carefully curated dataset to ensure the model learns effectively and performs well in specific scenarios.

By leveraging Azure’s powerful infrastructure and OpenAI’s advanced models, you can build sophisticated AI solutions tailored to your organization’s needs. Happy fine-tuning!