Deploy Llama 3.2 Model on Azure using AI Studio

Author: Daniel Fang

Published: September 21, 2024

The landscape of artificial intelligence is rapidly evolving, with large language models (LLMs) like OpenAI o1, Llama 3 and Mistral pushing the boundaries of what’s possible in natural language processing (NLP). Azure AI Services now supports a variety of open-source LLMs, providing developers with the tools to deploy and scale AI applications seamlessly.

In this blog post, we’ll walk you through deploying the Llama 3.2 model on Azure using Azure AI Studio. The steps outlined here can also be adapted for other supported open-source LLMs, allowing you to leverage the power of these models within Azure’s robust infrastructure.

Exploring Model Catalog

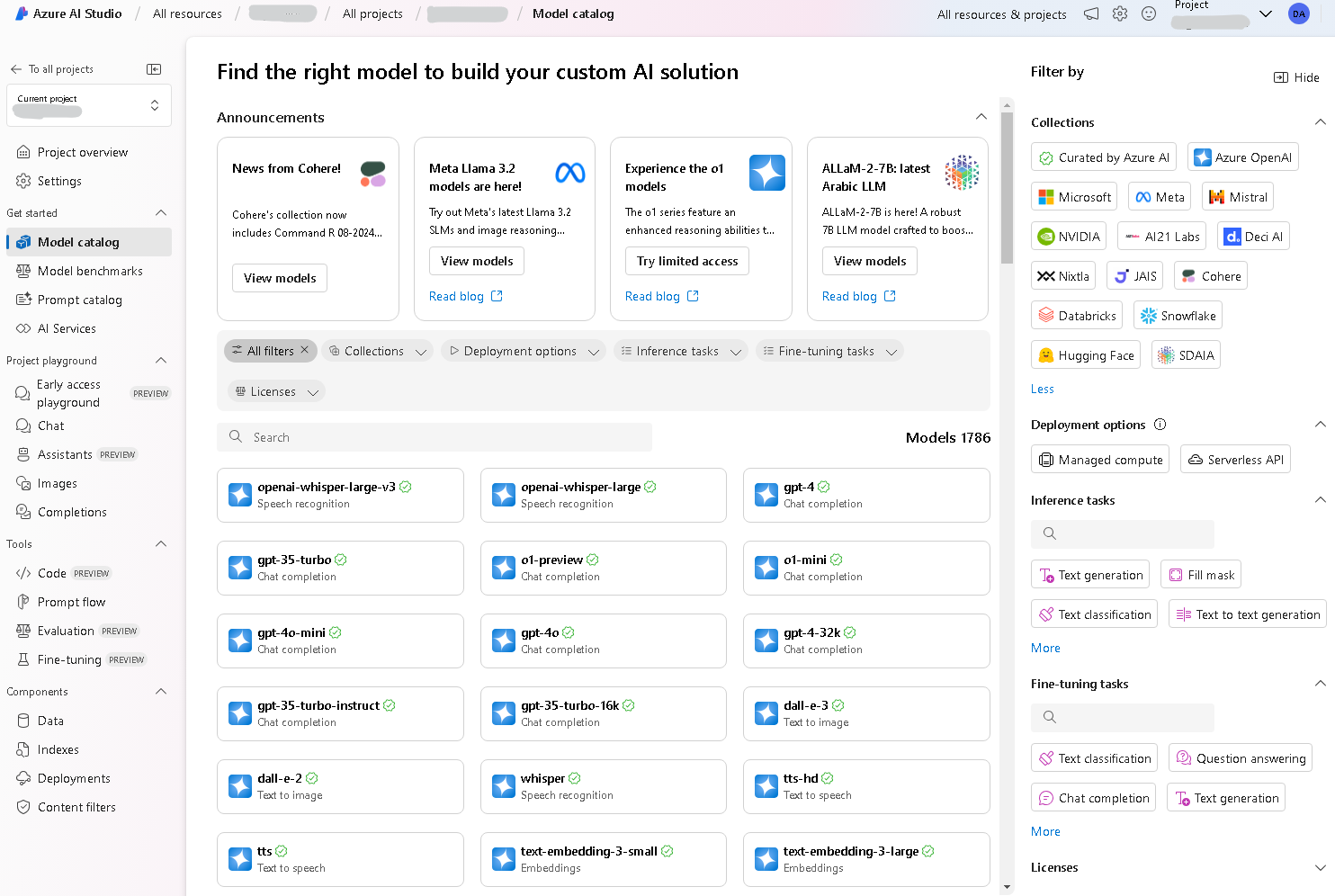

Azure AI Studio provides a Model Catalog where you can browse and select models for deployment. Note that the set of models available in the Model Catalog varies by Azure region.

To start, create a new Azure AI Studio hub and a new project in the East US region. In your project, go to the Model catalog tab under Get started, where you’ll see a variety of open-source models. Click the All filters option; the Filter by panel lists collections such as Meta, Mistral, NVIDIA, and Hugging Face.

Then select Meta in the filter. You will see about 44 models, including Llama-3.2 and Llama-2.

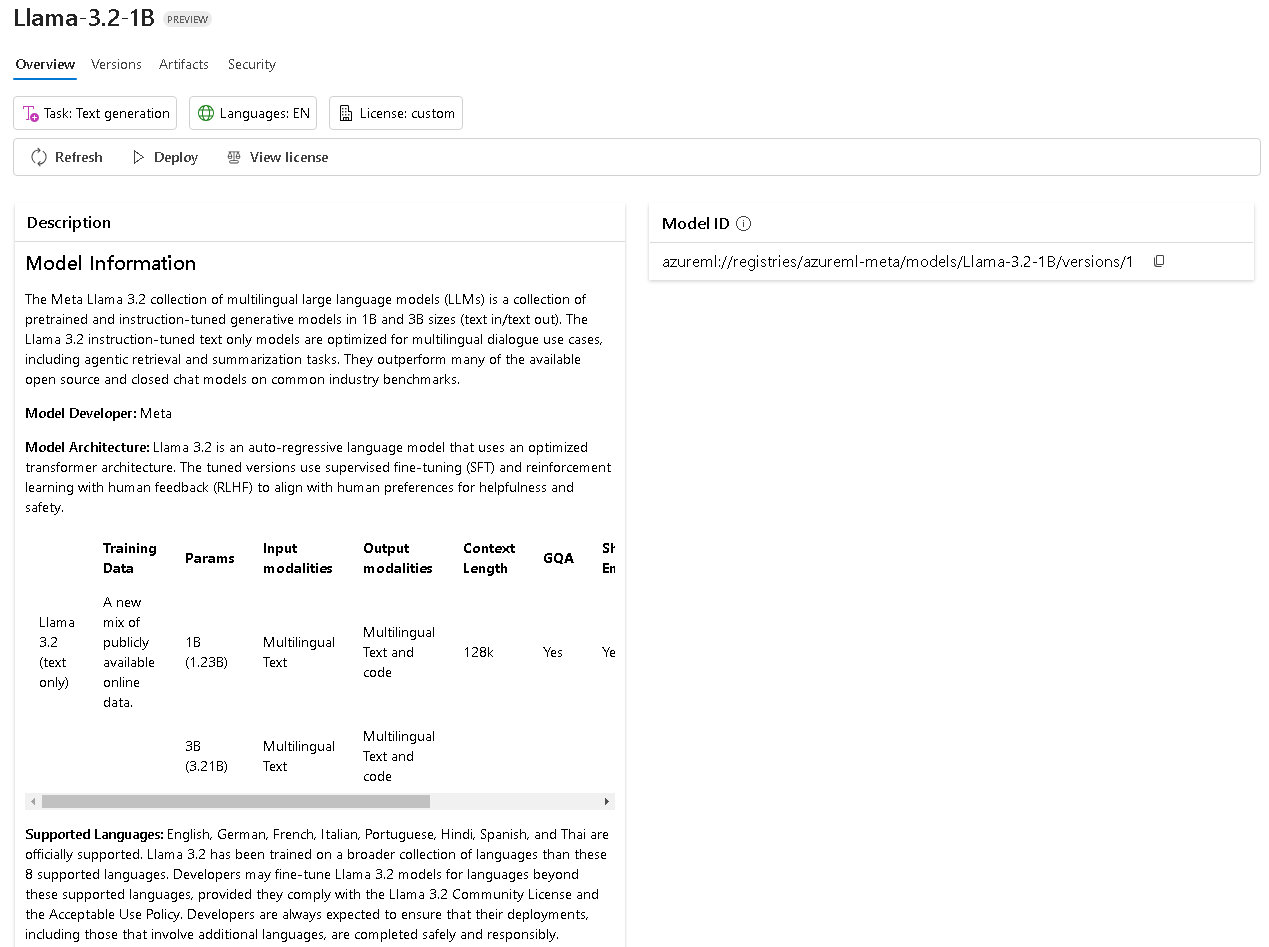

For this tutorial, we’ll choose Llama-3.2-1B. Selecting it opens a new page that shows the model’s details along with a description.

Deploy Llama Model

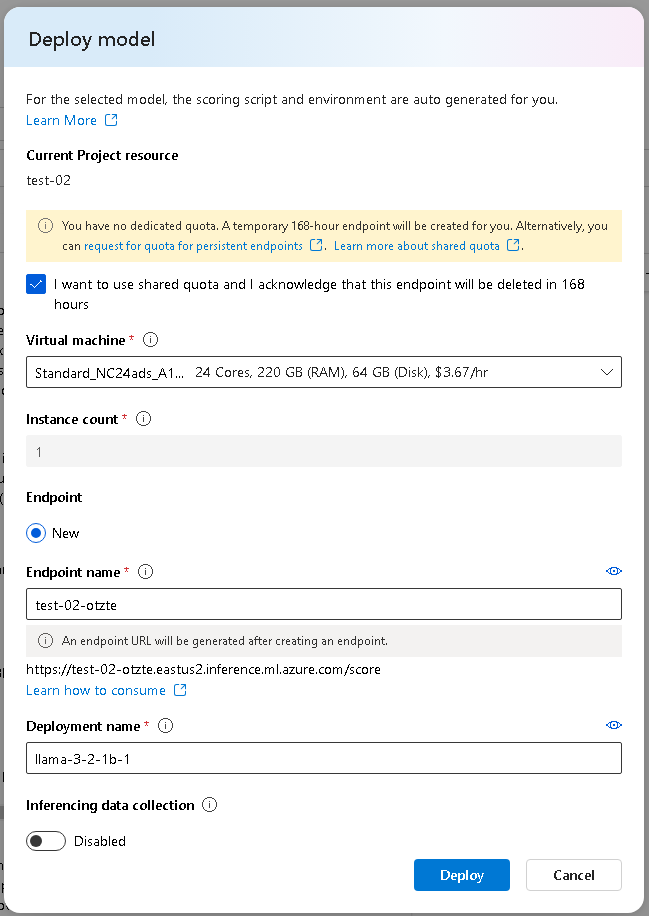

Now, let’s dive into deploying the Meta Llama model on Azure. On the model’s Overview page, click the Deploy button.

The Deploy model popup will appear. Choose a virtual machine size and provide a unique Endpoint name for your deployment. Click Deploy to start the deployment process.

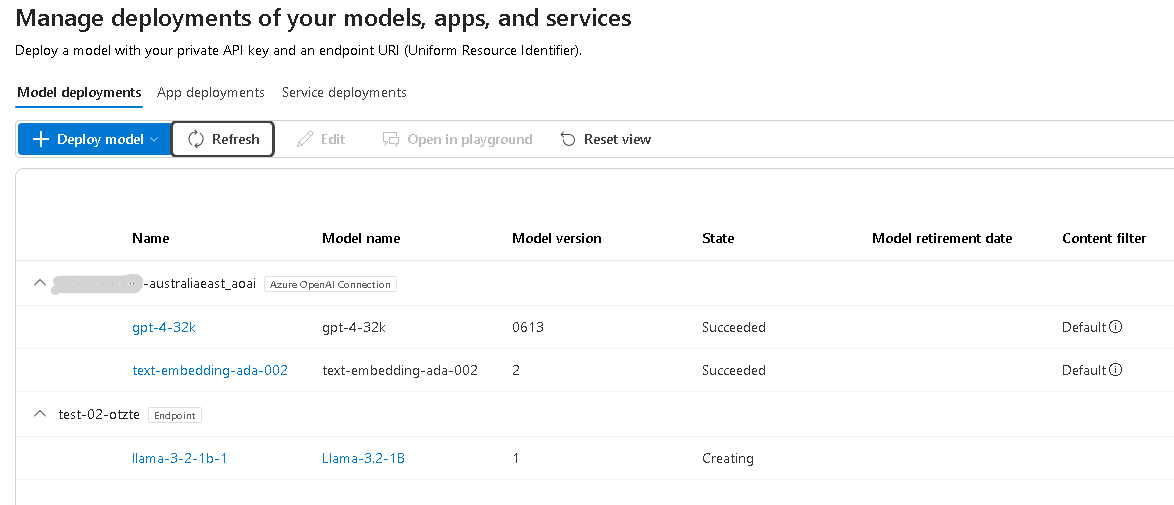

The deployment may take some time, depending on the model size and resources. Navigate to the Deployments tab in your project to monitor the progress.

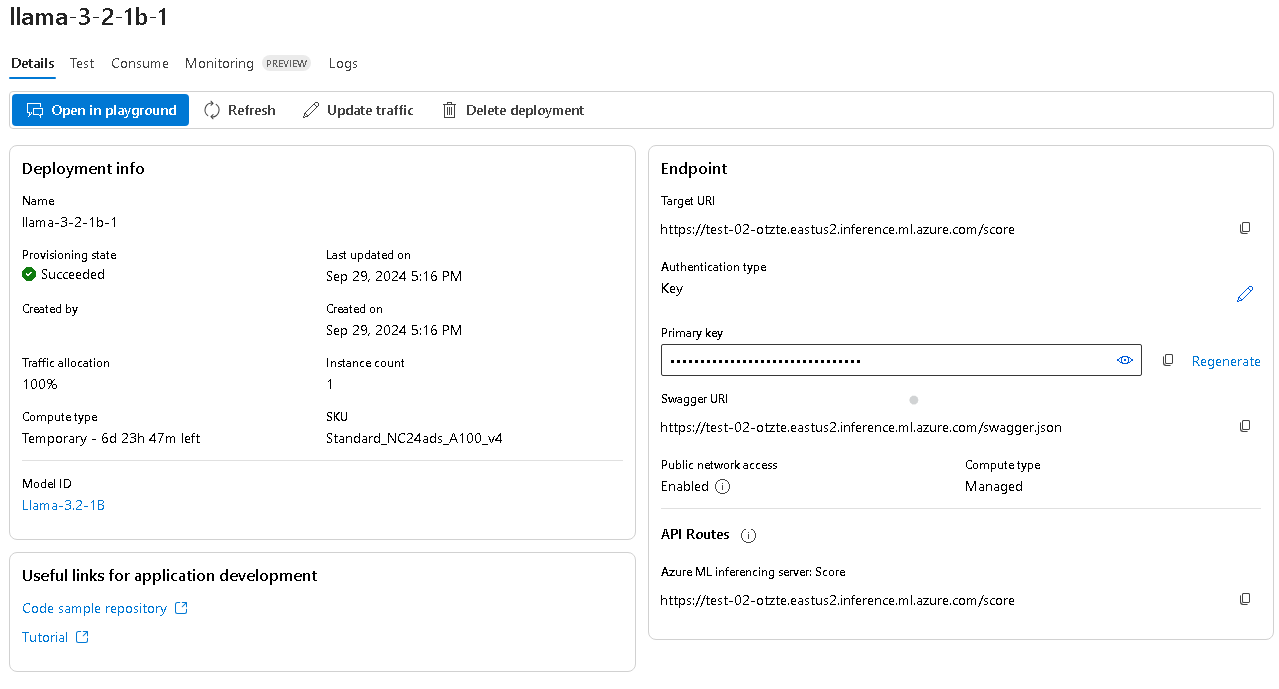

Once the deployment is complete, the Provisioning state changes to Succeeded. You can then retrieve the model’s endpoint details in the Details tab.
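If you would rather wait for the deployment from a script than watch the Deployments tab, a generic polling loop is one option. The sketch below is only an illustration: `wait_for_state` is a hypothetical helper, `get_state` stands for whatever function you supply to read the current provisioning state, and the `Failed` state name, polling interval, and timeout are assumptions rather than values taken from Azure.

```python
import time


def wait_for_state(get_state, target="Succeeded", failed=("Failed",),
                   interval=30.0, timeout=1800.0, sleep=time.sleep):
    """Poll get_state() until it returns target, a failed state, or time runs out."""
    waited = 0.0
    while True:
        state = get_state()  # caller-supplied: returns the current state as a string
        if state == target:
            return state
        if state in failed:  # assumed failure state name(s)
            raise RuntimeError(f"Deployment ended in state {state!r}")
        if waited >= timeout:
            raise TimeoutError(f"Still {state!r} after {timeout} seconds")
        sleep(interval)
        waited += interval
```

The `sleep` parameter is injectable so the loop can be exercised without real delays.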

Test it out

With the model deployed, let’s test it to ensure it’s working correctly. We’ll use the Python script below to send a request to the deployed model. Save the following code in a file named test.py.

    import urllib.request
    import json
    import os
    import ssl

    question = "the weather is fine today."
    print('Question:', question)

    # Request payload: a list of prompts under "input_data"
    data = {
        "input_data": [question]
    }
    body = str.encode(json.dumps(data))

    # Endpoint URL and API key from the deployment details
    url = '<YOUR_ENDPOINT_URL>'
    api_key = '<YOUR_API_KEY>'
    if not api_key:
        raise Exception("A key should be provided to invoke the endpoint")

    headers = {'Content-Type':'application/json', 'Authorization':('Bearer '+ api_key)}

    # Send the POST request and print the raw response bytes
    req = urllib.request.Request(url, body, headers)
    response = urllib.request.urlopen(req)
    answer = response.read()
    print('Answer:', answer)

Remember to update the placeholders:

- Replace <YOUR_ENDPOINT_URL> with the actual endpoint URL from your deployment details.

- Replace <YOUR_API_KEY> with your deployment’s API key.
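As an alternative to pasting the values into the file, the endpoint URL and key could be read from environment variables, which keeps the key out of source control. The sketch below assumes two variable names, `AZURE_ENDPOINT_URL` and `AZURE_API_KEY`; these are illustrative choices, not names that Azure defines, and `load_settings` and `build_request` are hypothetical helpers. The payload is the same `input_data` structure used in test.py.

```python
import json
import os
import urllib.request


def load_settings(env=os.environ):
    """Read the endpoint URL and API key from (assumed) environment variables."""
    url = env.get("AZURE_ENDPOINT_URL")
    api_key = env.get("AZURE_API_KEY")
    if not url or not api_key:
        raise RuntimeError("Set AZURE_ENDPOINT_URL and AZURE_API_KEY first")
    return url, api_key


def build_request(question, url, api_key):
    """Build the same POST request as test.py, without sending it."""
    body = json.dumps({"input_data": [question]}).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "Authorization": "Bearer " + api_key,
    }
    return urllib.request.Request(url, data=body, headers=headers)
```

With both variables set, `urllib.request.urlopen(build_request(question, *load_settings()))` would send the request just as test.py does.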

Open a terminal and run:

    python test.py

Here’s an example of the response you might receive. This indicates that the model is generating a continuation of the input text, showcasing its language generation capabilities.

    PS D:\blog> python test.py
    Question: the weather is fine today.
    Answer: b'["the weather is fine today. I don\'t mind if it gets a little wet. A real rain would be"]'
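The answer arrives as raw bytes containing a JSON array of strings, as the sample output above shows. A minimal sketch of decoding it and trimming the echoed prompt might look like this. Note that `extract_completion` is a hypothetical helper, and it assumes the response shape matches the sample, which other models or deployments may not share.

```python
import json


def extract_completion(raw, prompt):
    """Decode the endpoint's raw bytes and return the text after the prompt."""
    texts = json.loads(raw.decode("utf-8"))  # assumed: a JSON array of strings
    text = texts[0]
    # The model echoes the prompt, so drop it if it is present at the start.
    if text.startswith(prompt):
        text = text[len(prompt):]
    return text.strip()


raw = b'["the weather is fine today. I don\'t mind if it gets a little wet. A real rain would be"]'
print(extract_completion(raw, "the weather is fine today."))
```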

Endpoint Details

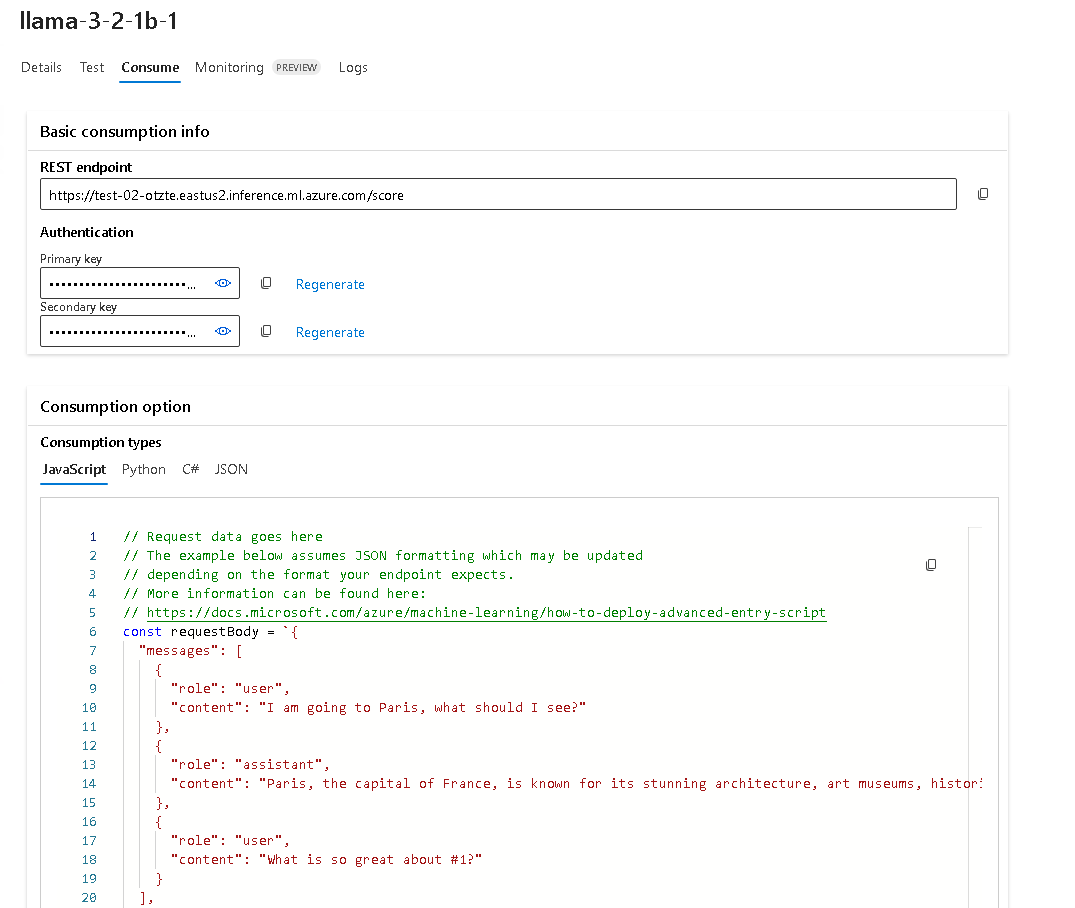

The components of your deployment endpoint are in the Consume tab.

- REST endpoint: The URL where your model is hosted. Requests to the model are sent to this URL.

- API Key: A security token required to authenticate requests to the endpoint.

Note: The sample code provided in the Consume tab might not be fully suitable for the LLM you deployed; you might need to revise the payload structure.

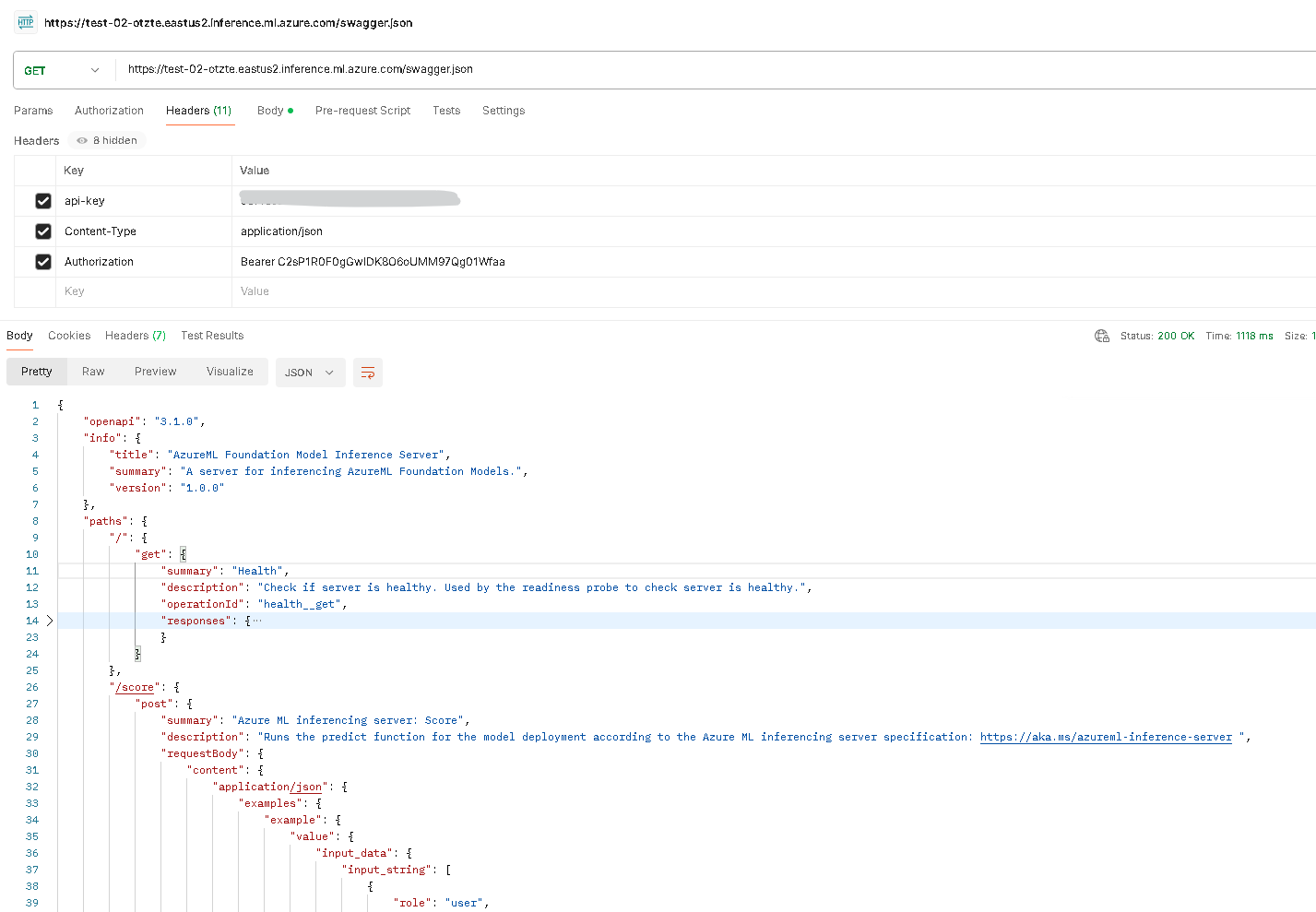

The Swagger endpoint of the model looks like the following.

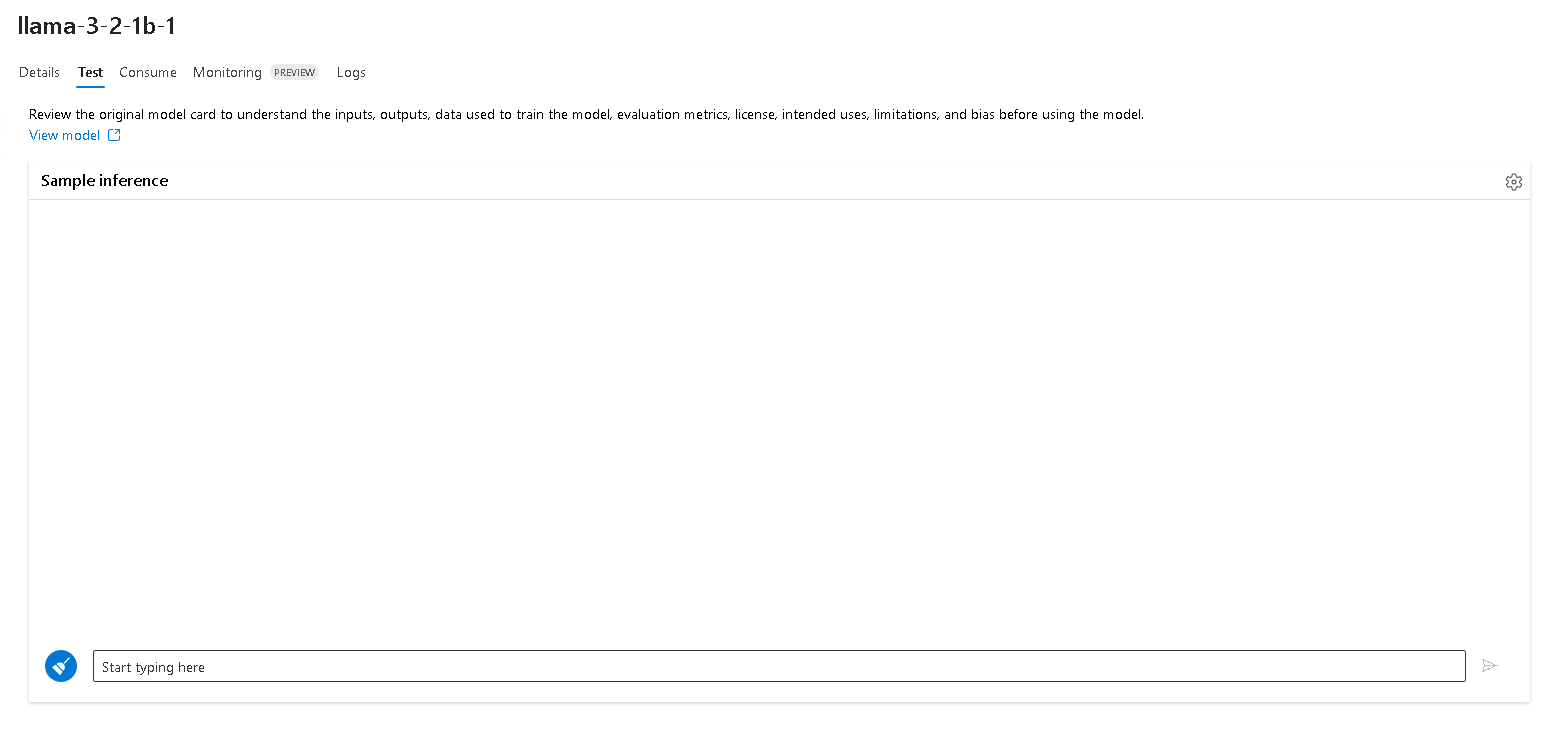

Test & Playground

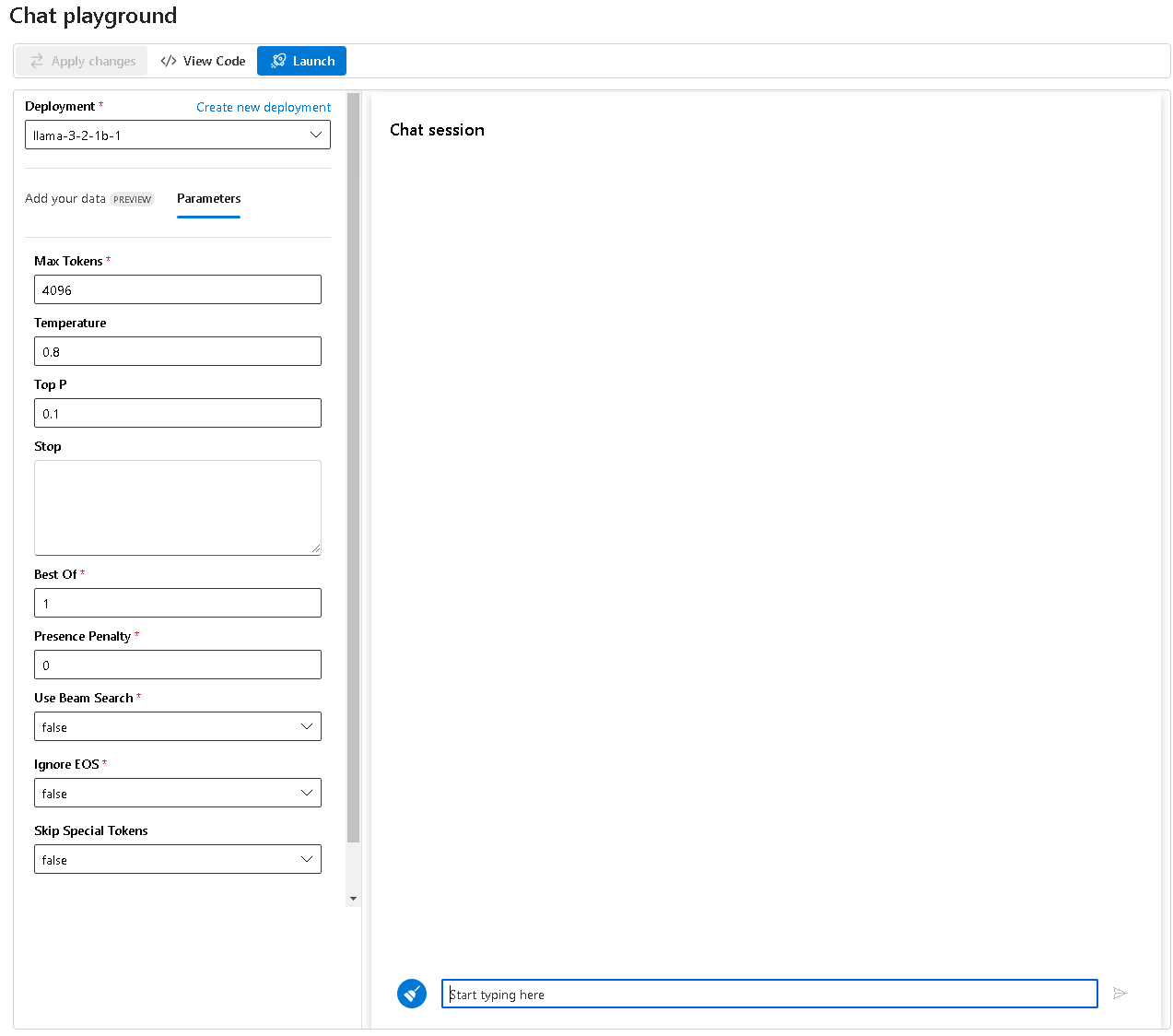

Azure AI Studio offers a Test & Playground feature to interact with your model directly from the browser.

You can also access the model in the Chat Playground.

Note: Certain models might not work directly in the Playground because of their payload structure. If you get a 400 error, try calling the API directly with the correct payload for the model.
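When you call the API directly from Python and the request is rejected, `urllib` raises `urllib.error.HTTPError`, and the response body often carries details about what went wrong. The following sketch, with hypothetical helper names `describe_http_error` and `send`, prints that body before re-raising. The exact error format a given deployment returns is not covered here, so treat the body as free-form text.

```python
import urllib.error
import urllib.request


def describe_http_error(err):
    """Return the status code, reason, and response body of an HTTPError."""
    body = err.read().decode("utf-8", errors="replace")
    return f"HTTP {err.code} {err.reason}: {body}"


def send(req):
    """Send a prepared request and print the error body if the call fails."""
    try:
        with urllib.request.urlopen(req) as response:
            return response.read()
    except urllib.error.HTTPError as err:
        print(describe_http_error(err))
        raise
```

Here `req` is a `urllib.request.Request` like the one built in test.py.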

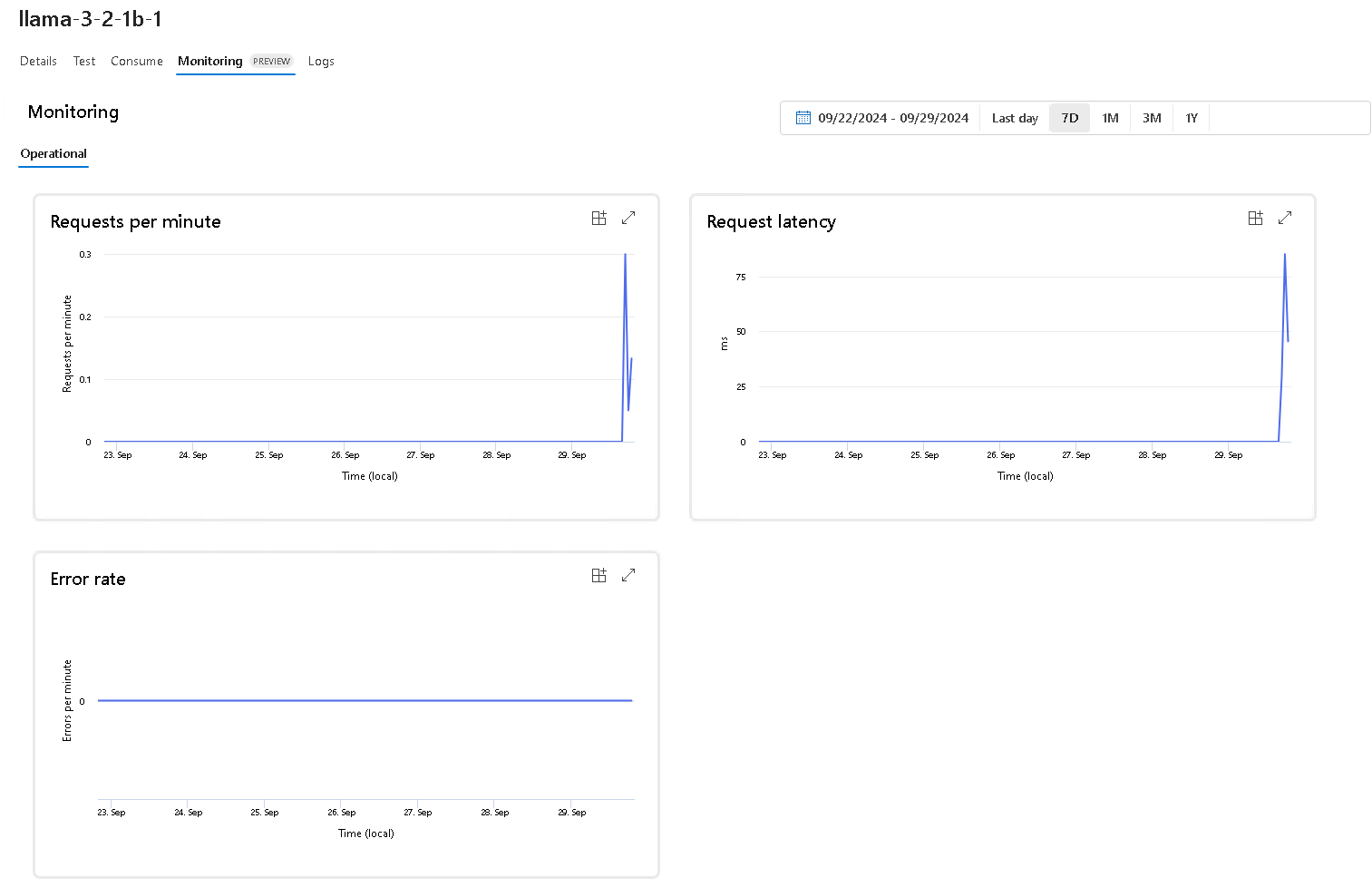

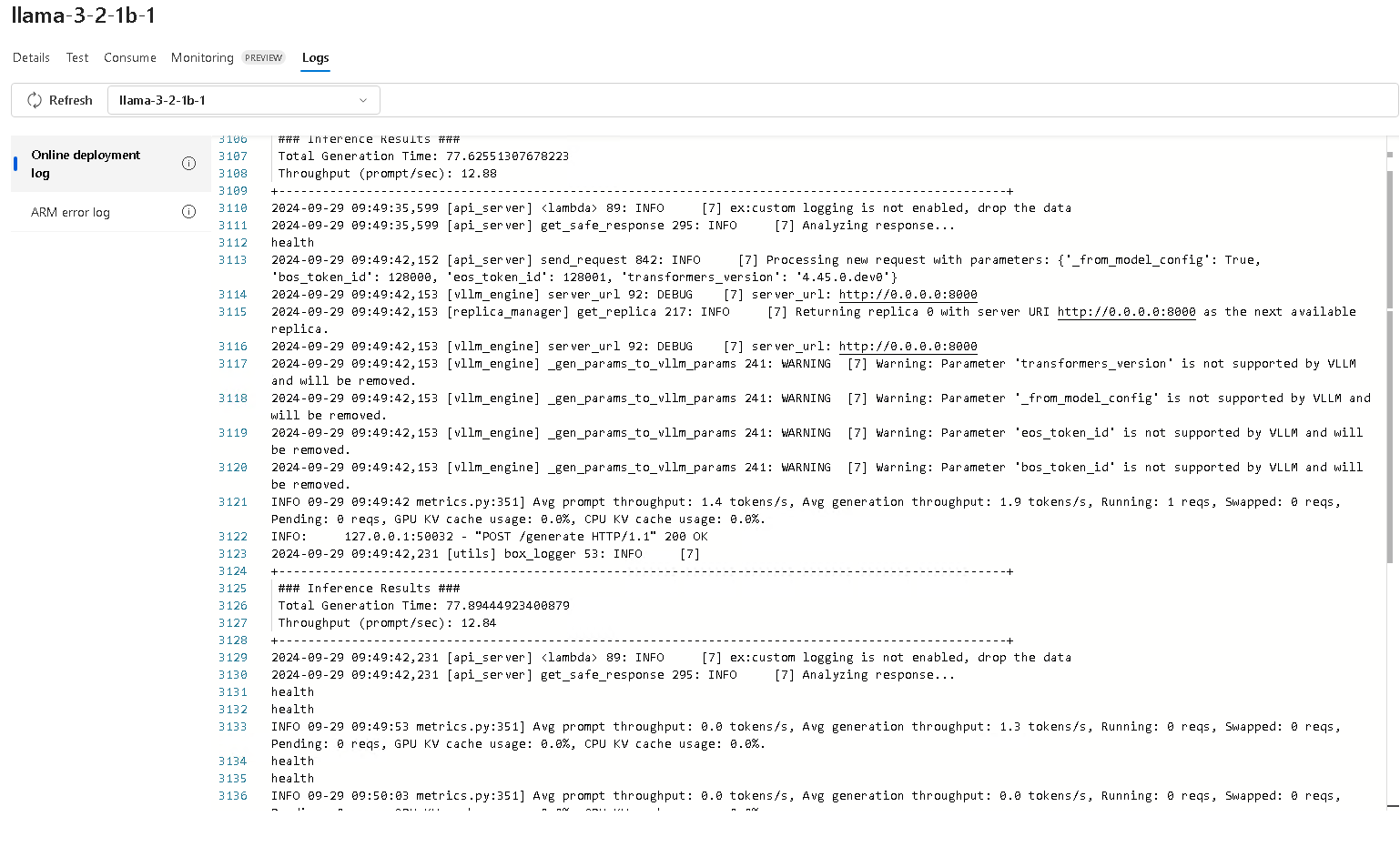

Monitoring and Logs

The Monitoring tab provides performance statistics for the deployment.

Use the Logs tab to trace the deployment’s logs.

Conclusion

Congratulations! You’ve successfully deployed the Llama 3.2 model on Azure using Azure AI Studio. This setup allows you to harness the power of advanced language models within the scalable and secure environment of Azure. Whether you’re developing conversational AI, content generation tools, or other NLP applications, Azure AI Services provides a unified platform to deploy and manage your AI solutions efficiently.