Integrating Azure OpenAI Service Behind API Management Using SDK

Author: Daniel Fang

Published: November 3, 2024

27 minutes to read

Integrating Azure OpenAI service with your existing systems and applications can streamline various AI-driven functionalities. By deploying the Azure OpenAI service behind Azure API Management (APIM), you can enhance security, manage API access, and improve monitoring, all while making the service accessible across multiple languages and platforms. This post walks through setting up this integration step by step, with sample code using CURL and the SDKs for Python, Node.js and C#.

1 Setting Up

We first need to deploy an Azure OpenAI Service and an Azure API Management (APIM) instance, then expose the Azure OpenAI API via APIM.

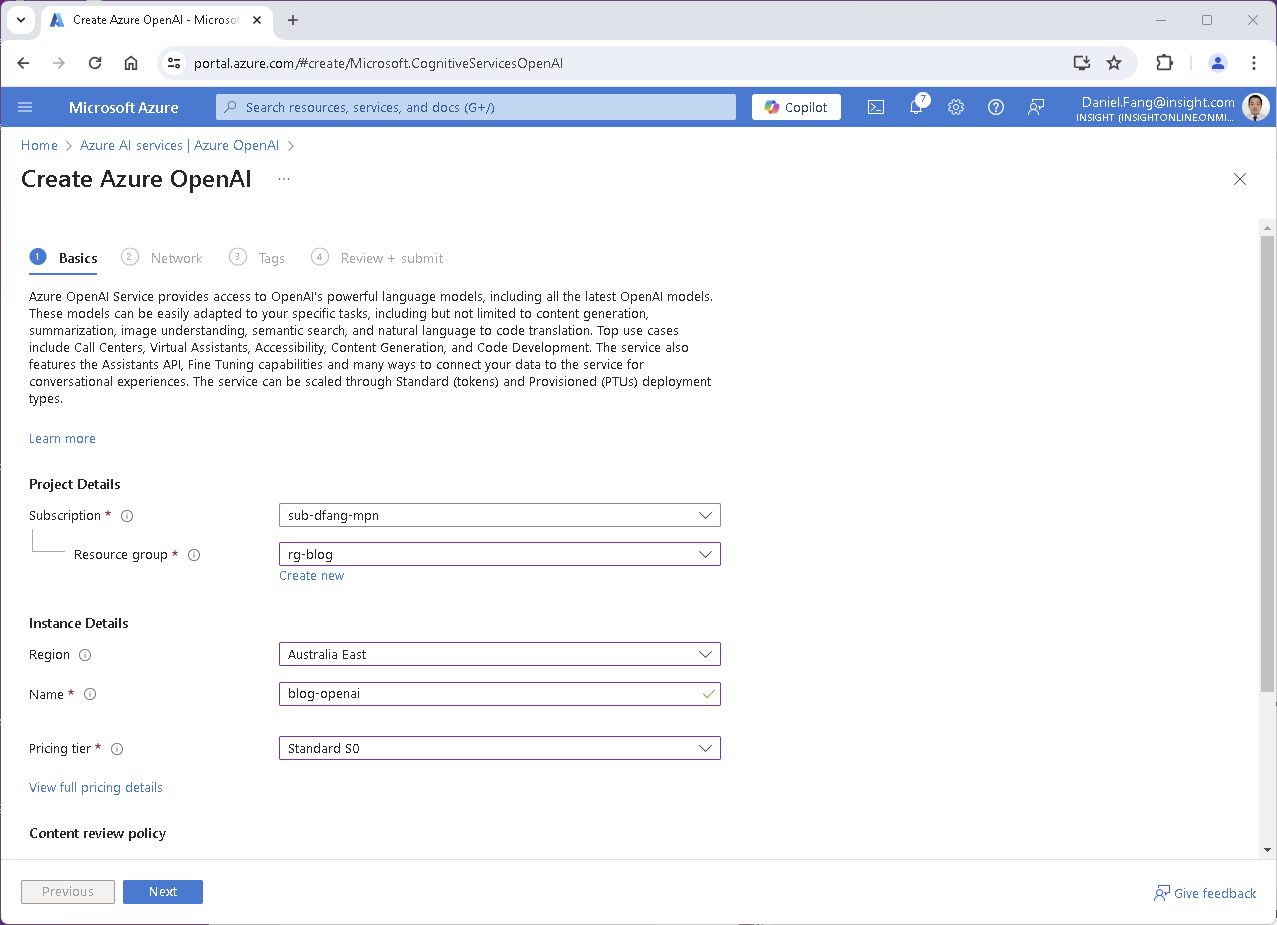

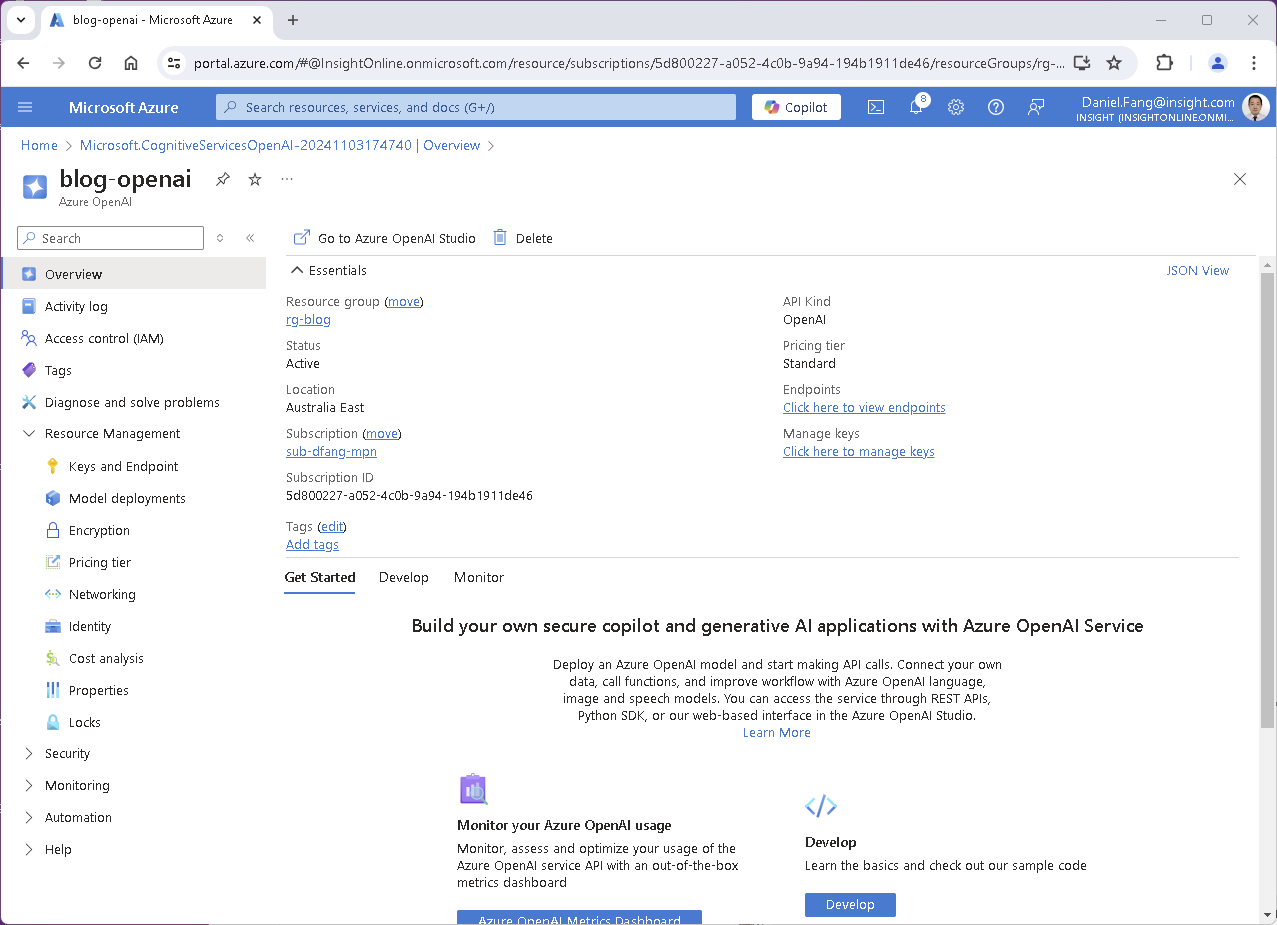

1.1 Deploy Azure OpenAI Service

Let’s briefly go over the setup steps to deploy Azure OpenAI Service.

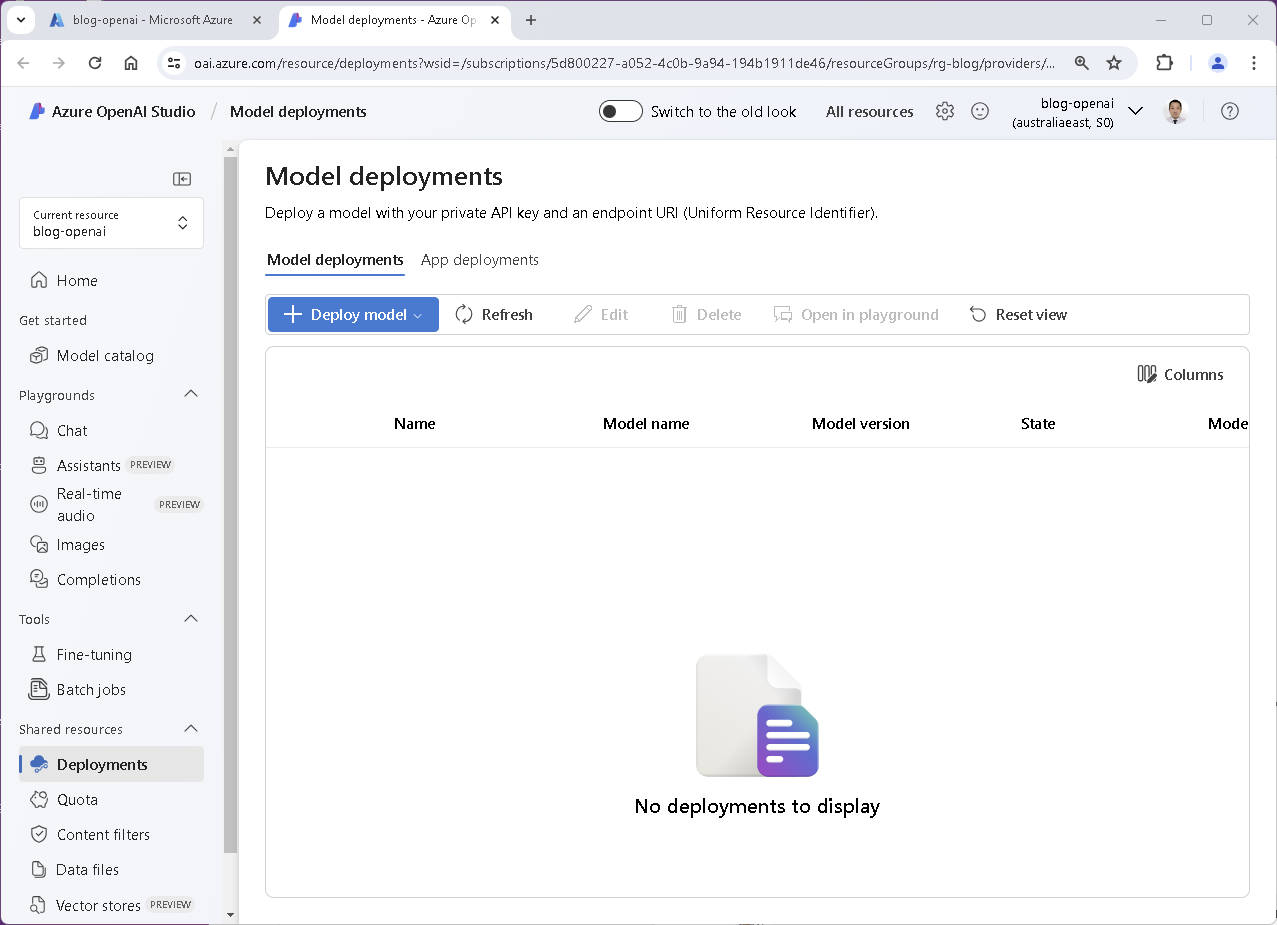

- Create an Azure OpenAI Service in the Azure Portal

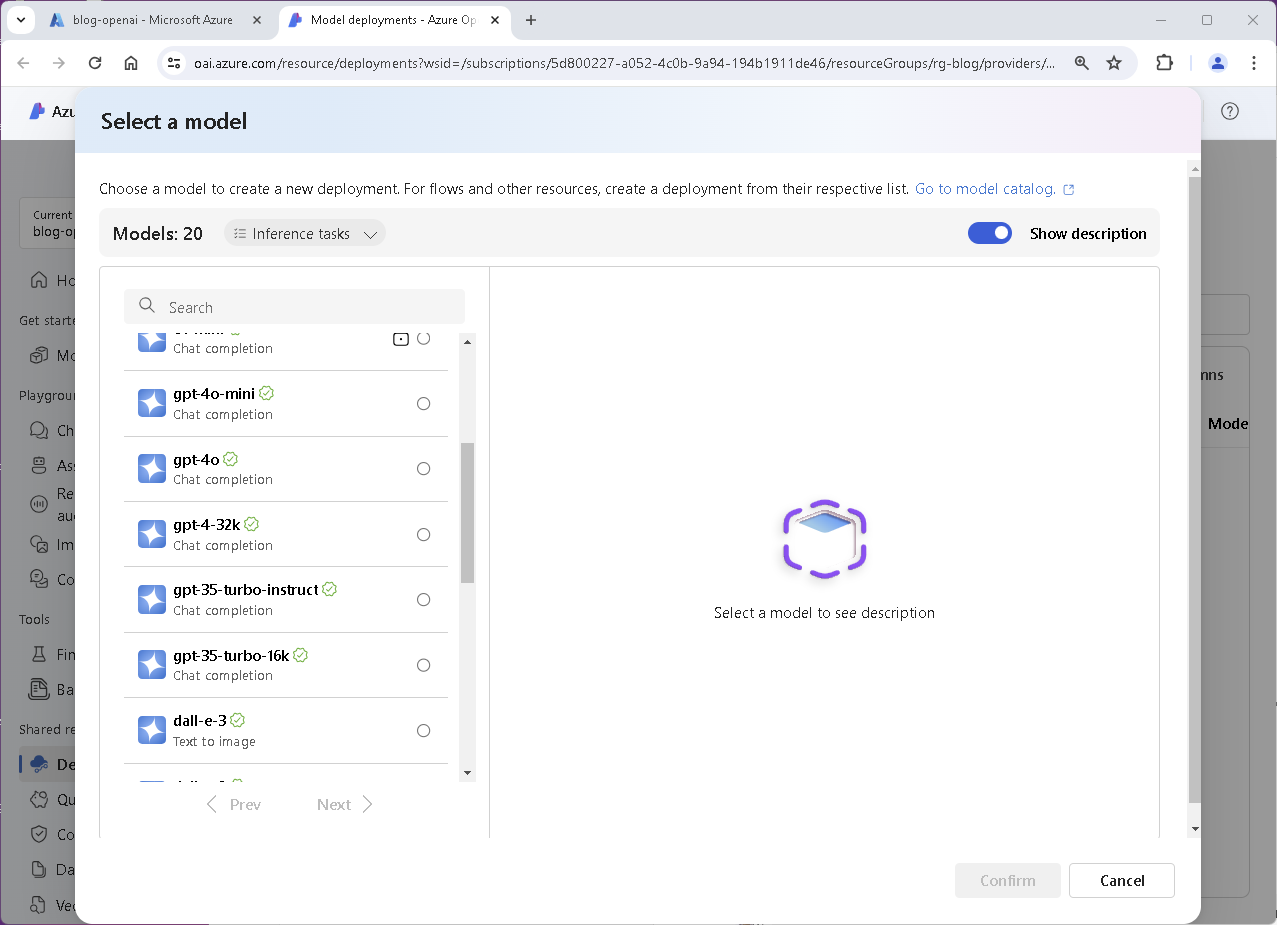

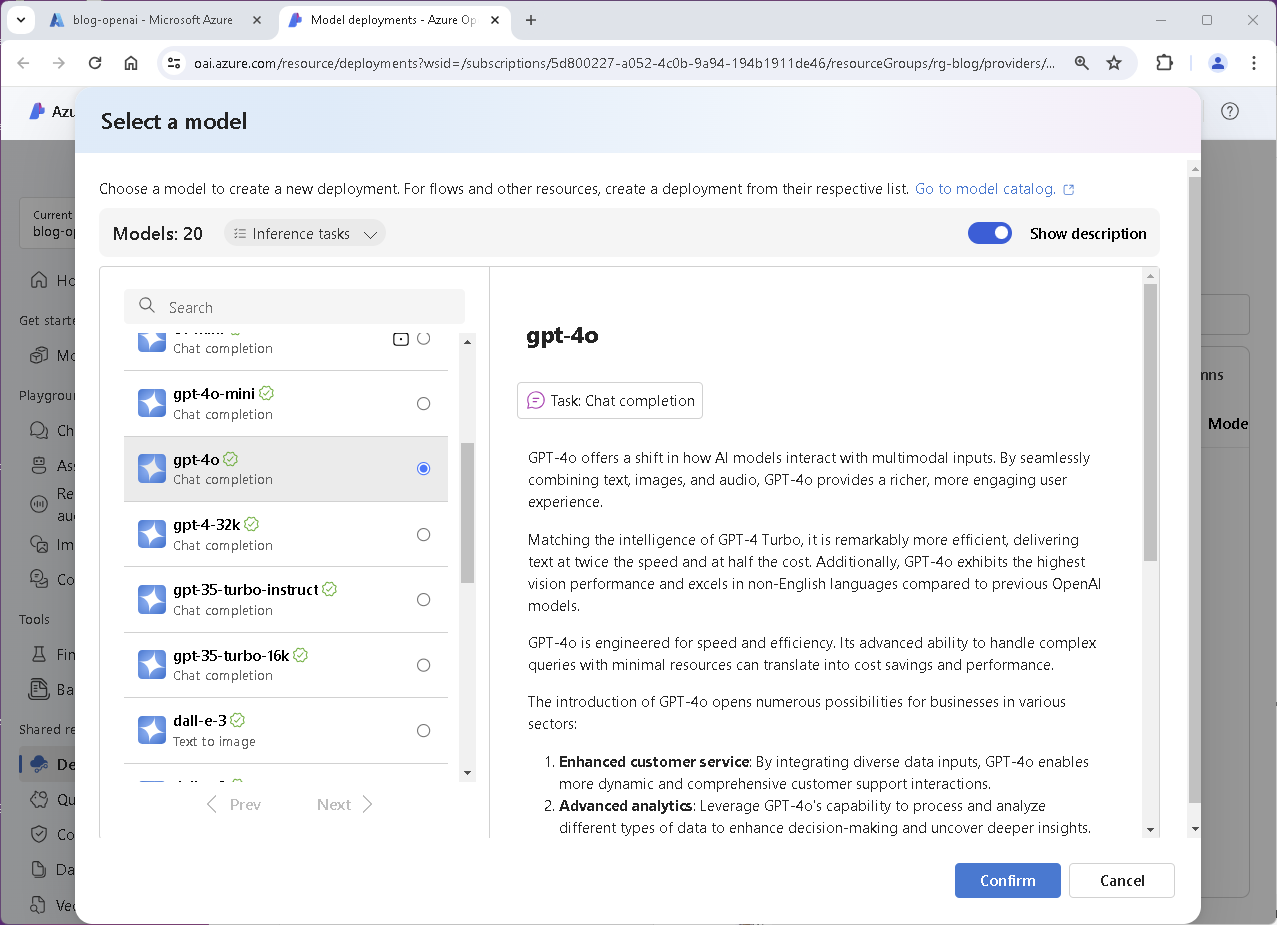

- Browse the list of OpenAI models and select GPT-4o

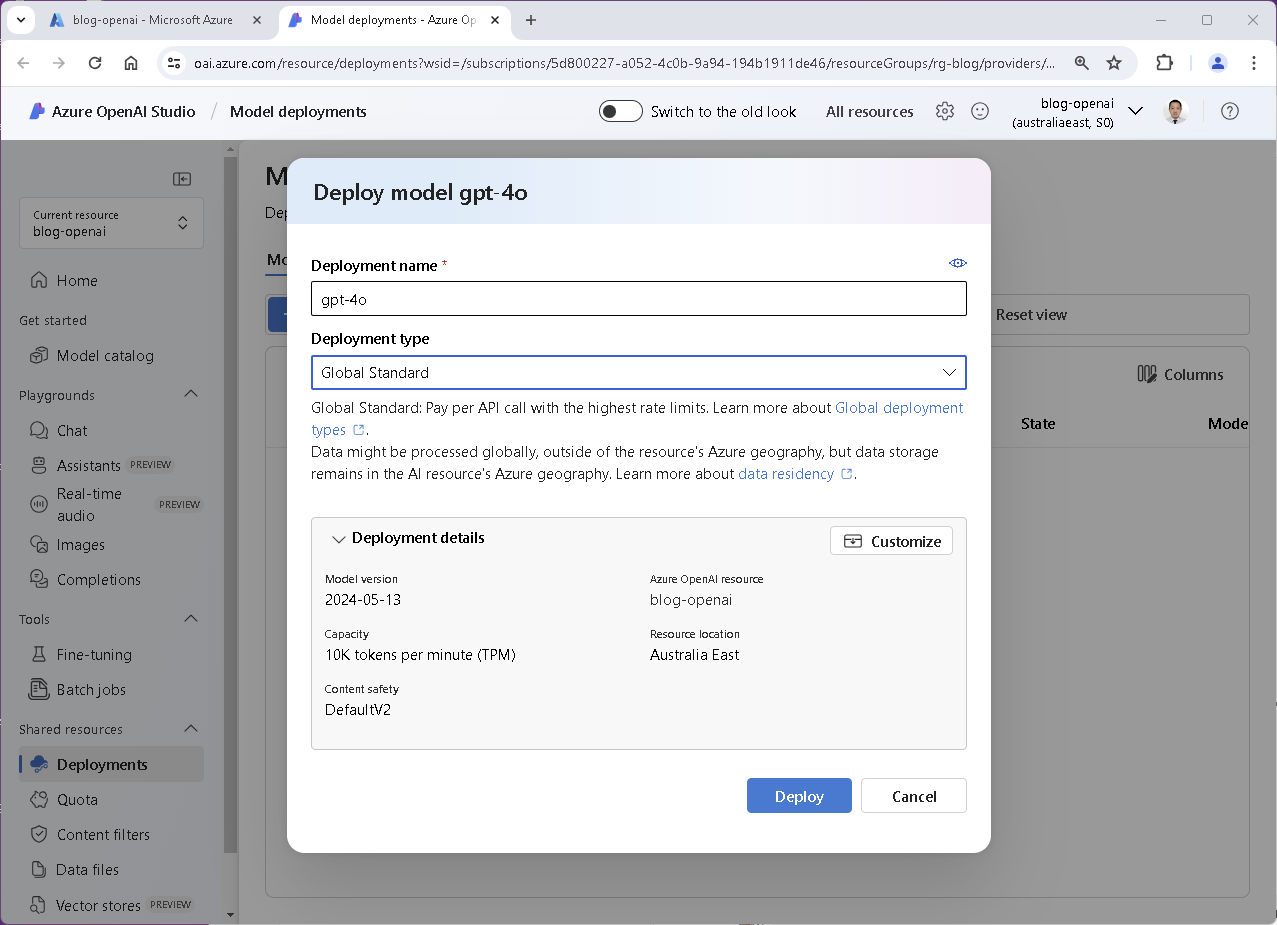

- Complete the details in the popup and deploy a GPT-4o model

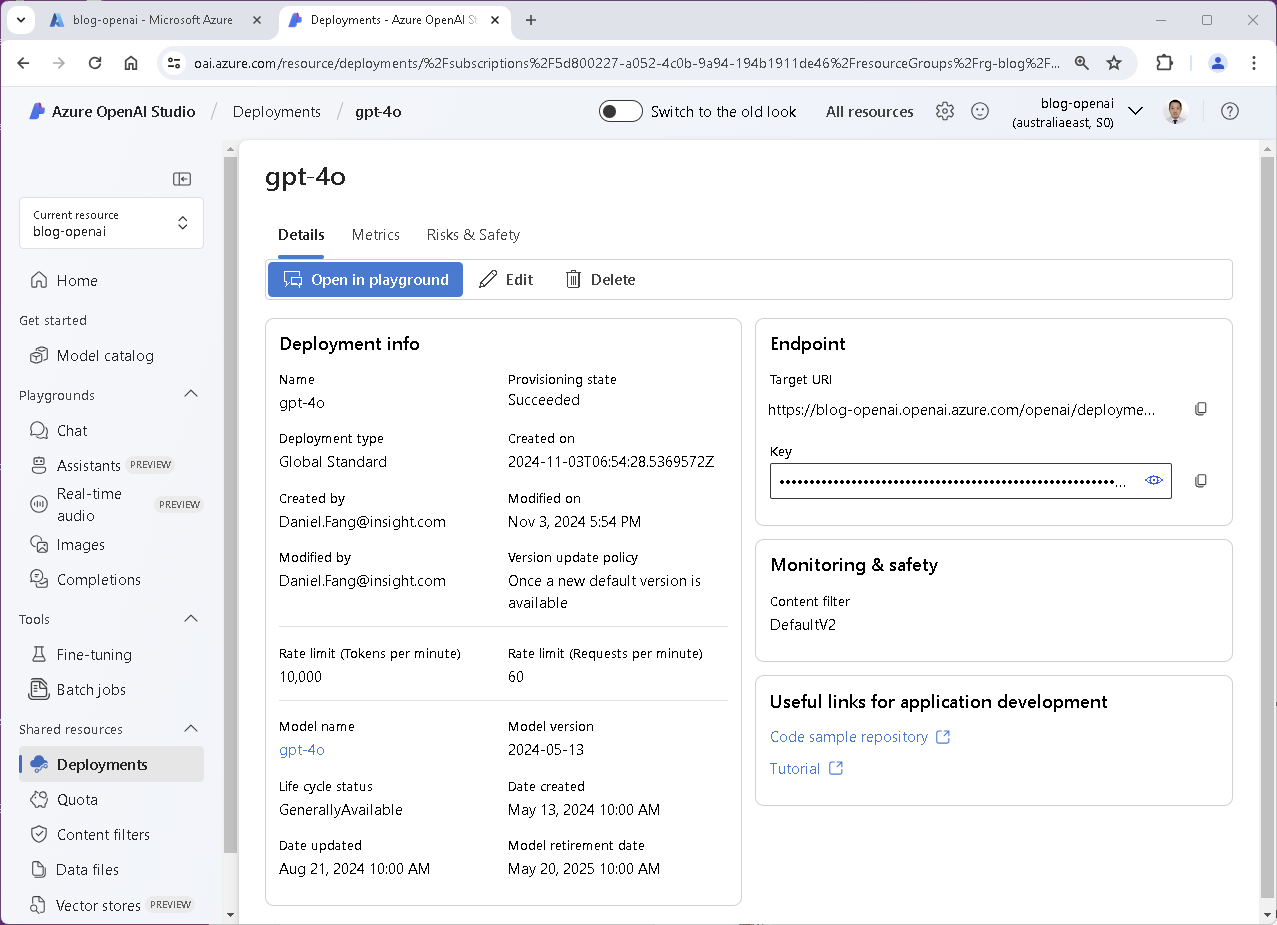

- Retrieve the GPT-4o model endpoint details and API keys

1.2 Deploy Azure API Management

Azure API Management (APIM) is a powerful service for managing APIs at scale, offering capabilities like:

- Security: By placing your API behind APIM, you can enforce authentication and authorization, making sure only authorized users can access the OpenAI service.

- Rate Limiting & Quotas: Control the usage of your APIs by implementing rate limits and quotas, helping prevent abuse and managing costs.

- Logging & Monitoring: Gain insights into API usage, monitor performance, and diagnose issues.

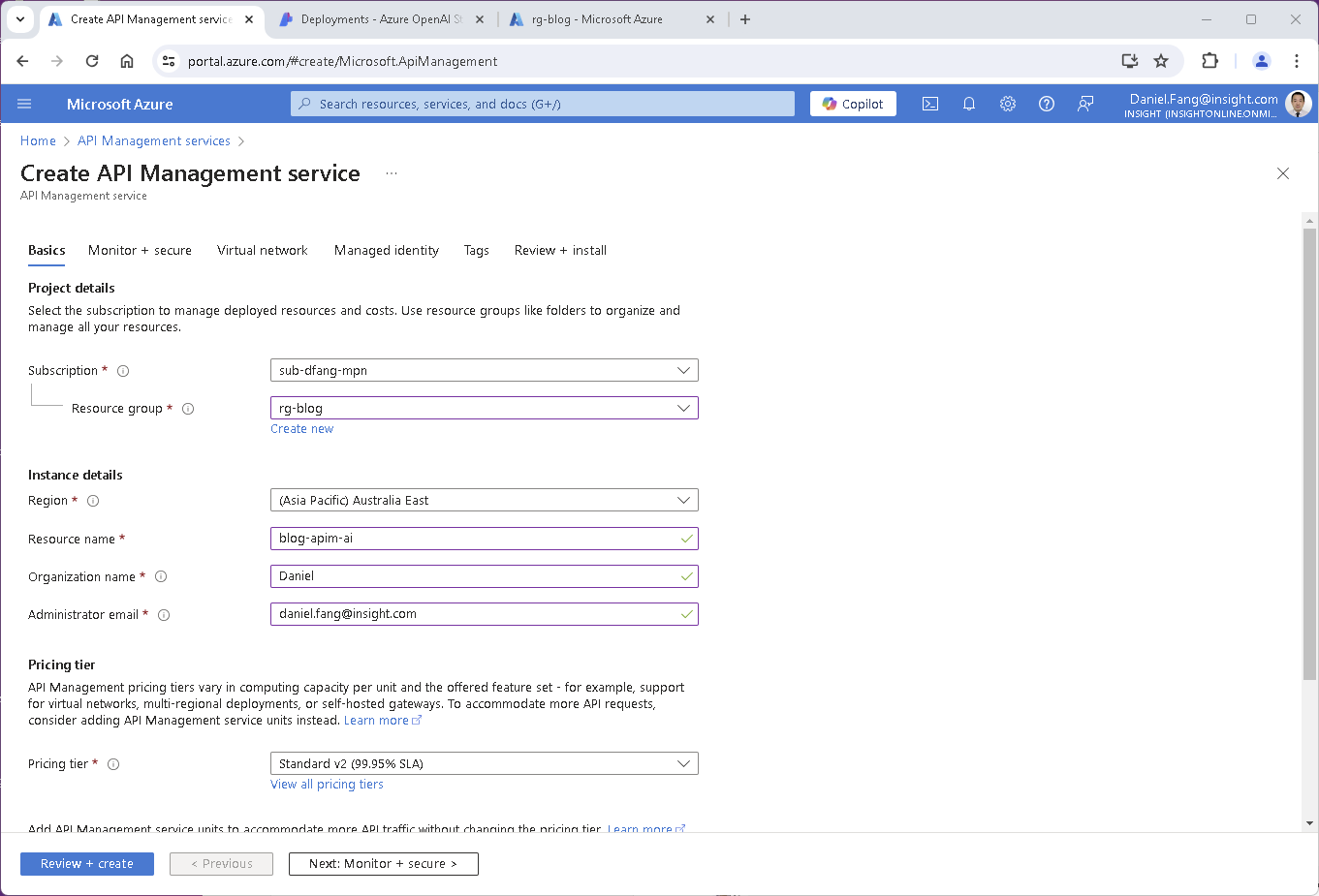

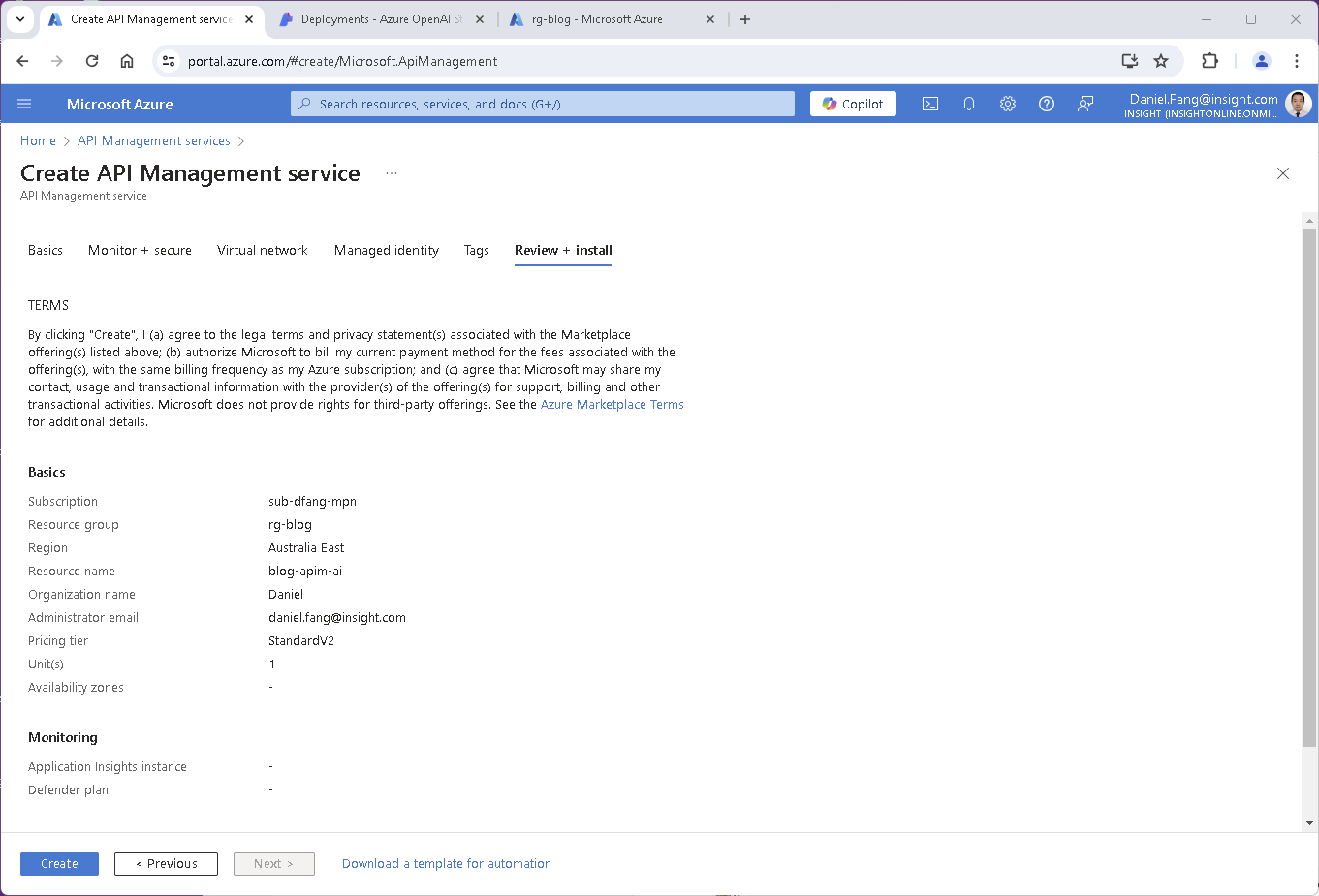

Let’s deploy an Azure API Management instance if you don’t have one already.

- Create an Azure API Management deployment

1.3 Expose Azure OpenAI Endpoint in APIM

Great, we now have an Azure OpenAI service and also an APIM. It’s time to link them together.

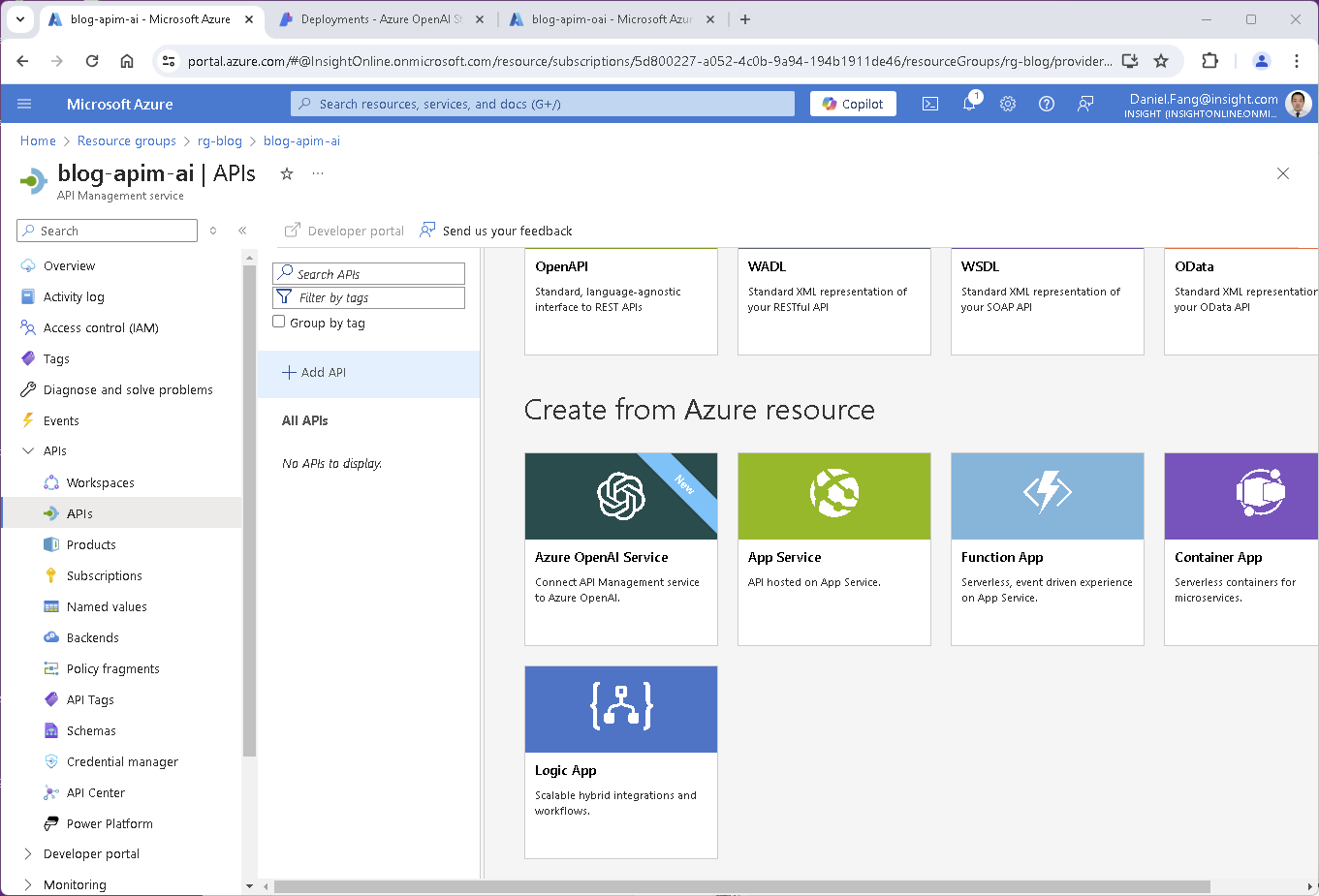

- From the APIs page, look for Create from Azure resource. There is an existing template for connecting APIM to an Azure OpenAI service.

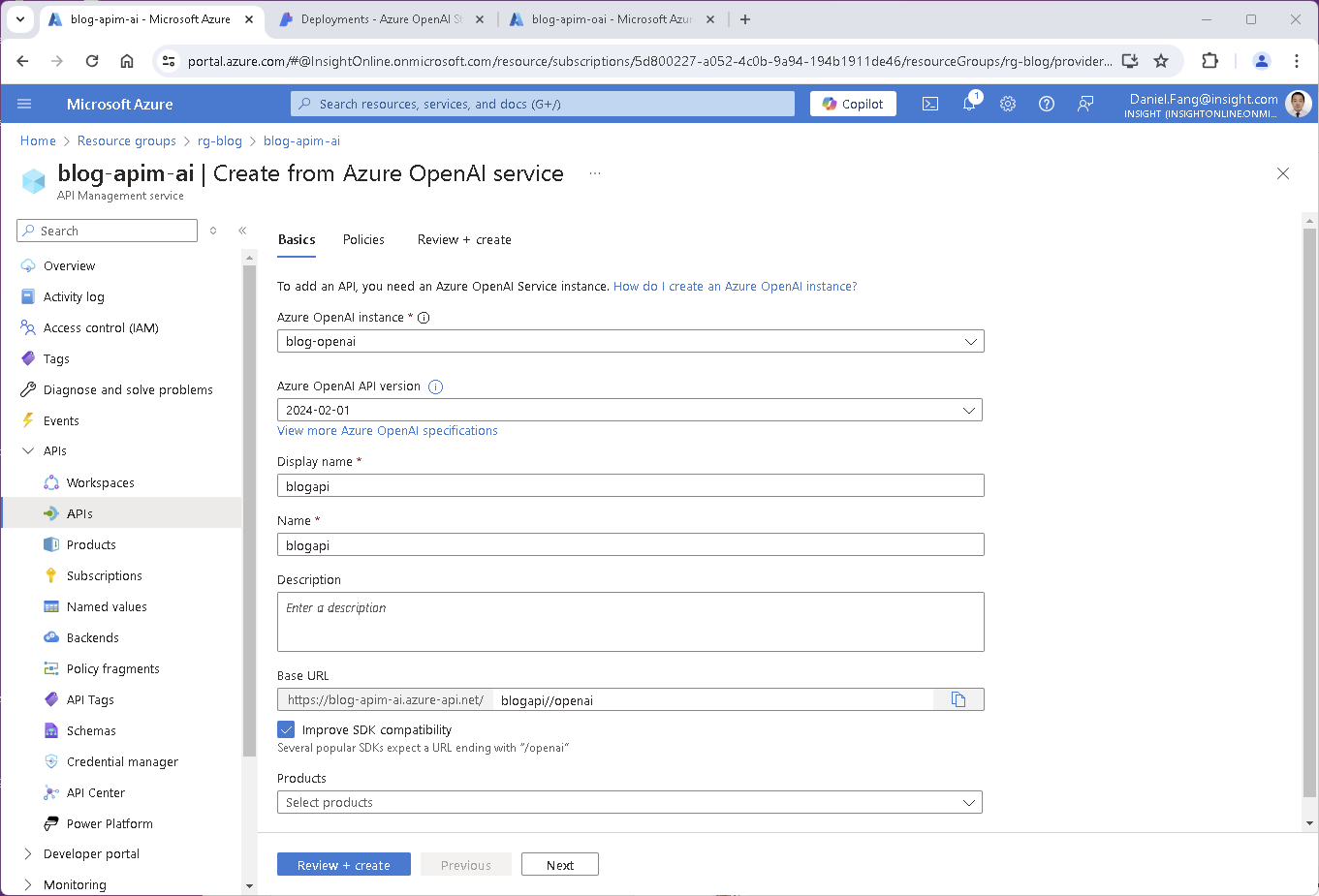

- Define the API details and associate it with Azure OpenAI service as backend.

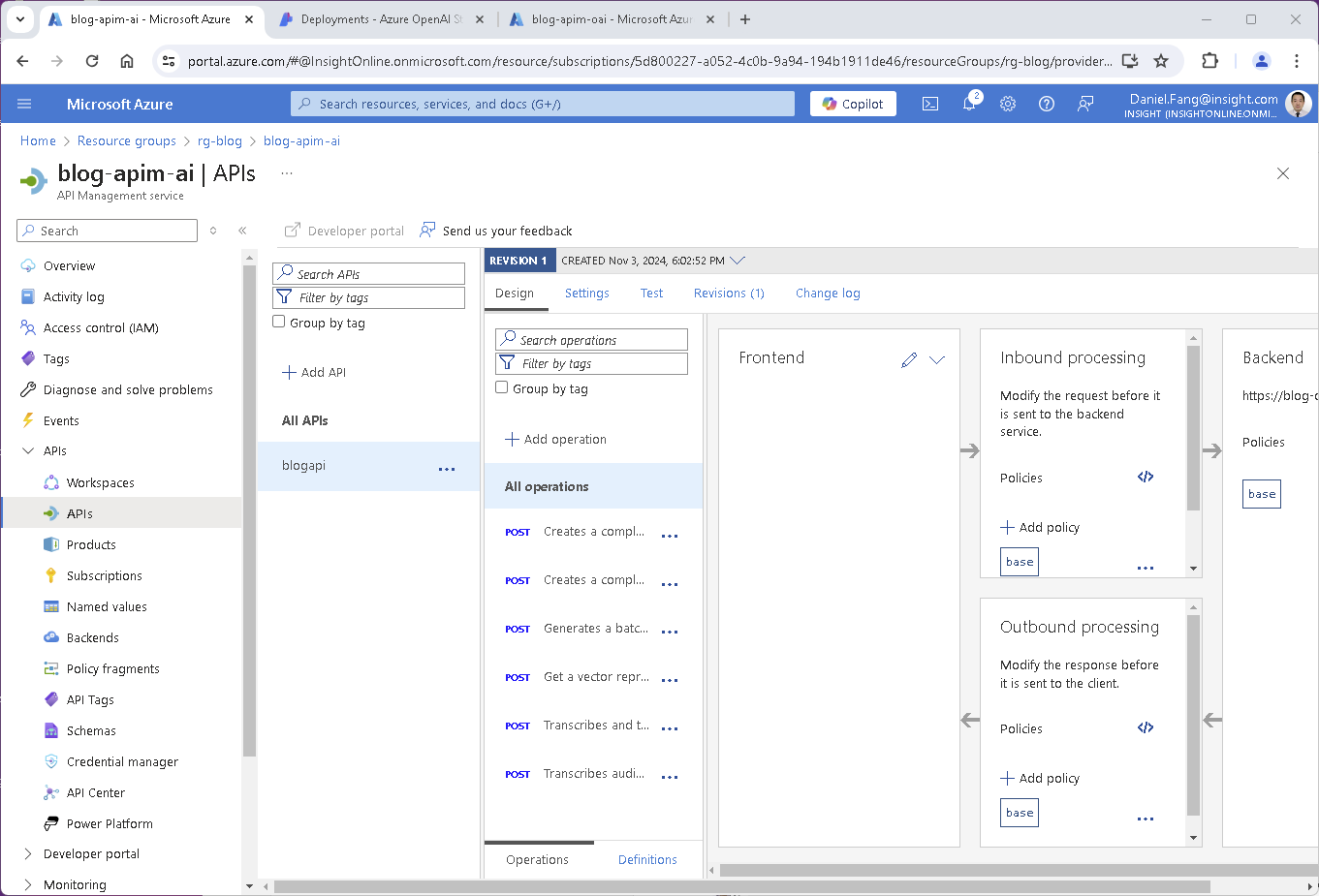

- Once the API is deployed, you can see a list of OpenAI operations, like chat completions. With APIM handling requests, you now have a secure, managed API endpoint that can be called from your applications.

- Go to

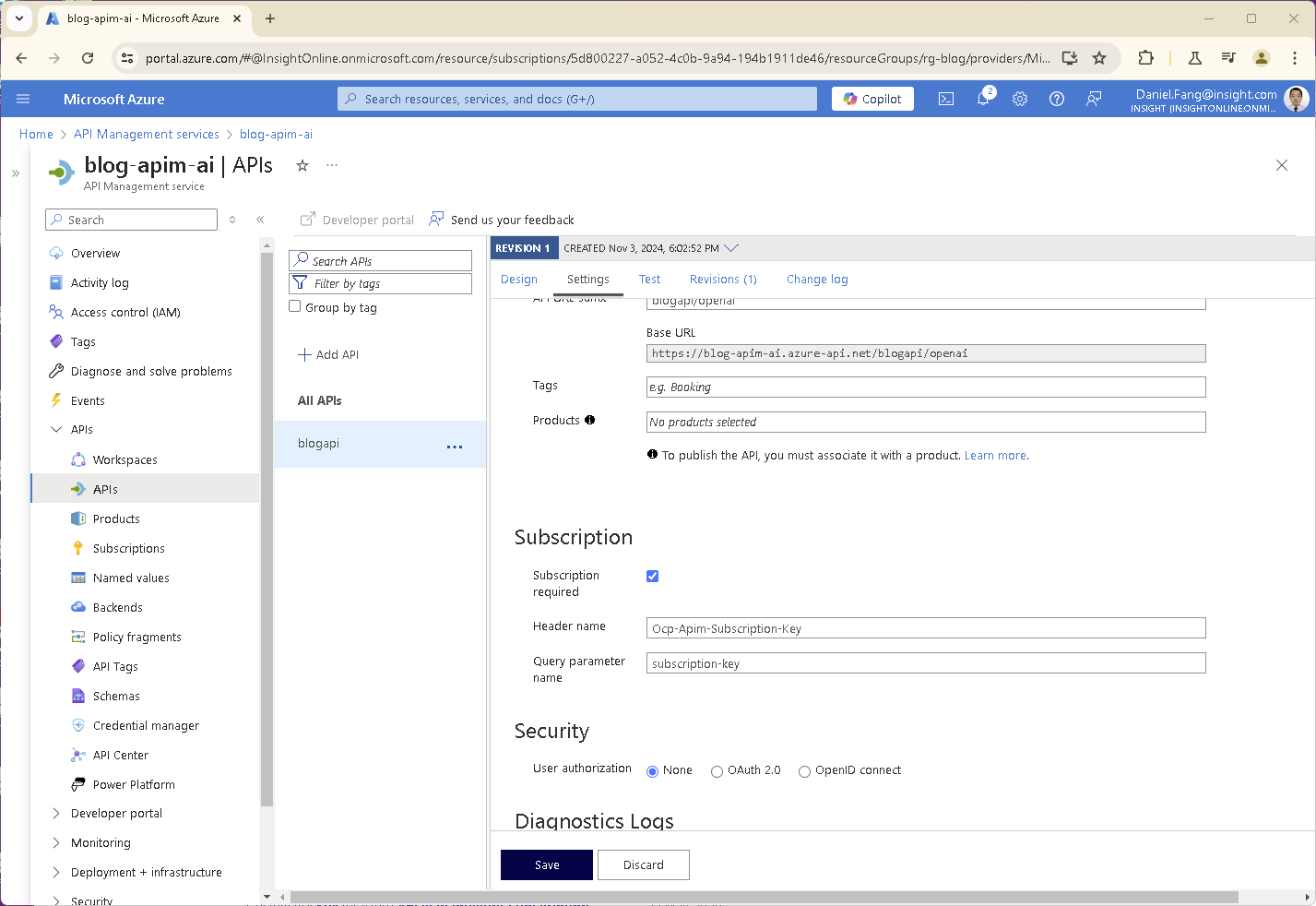

Settingstab to check theheader nameundersubscriptionsection. In this case, we can see APIM subscription key header isOcp-Apim-Subscription-Key. We will use this header late for API calls.

- Finally, navigate to

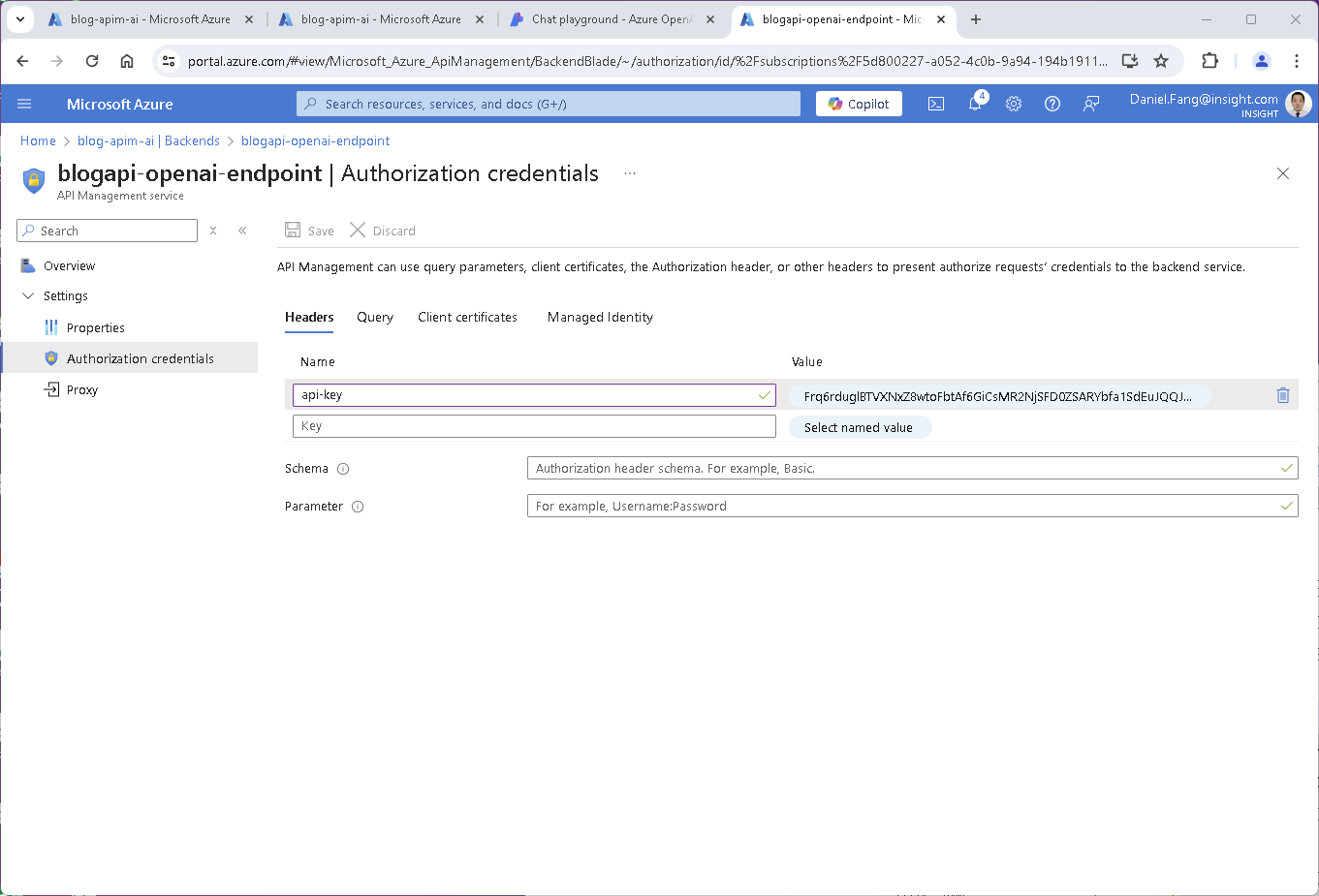

Backendstab and verify the Azure OpenAI backend settings. The backend should have Azure OpenAI Service endpoint URL asRuntime URLandapi-keyheader as below. Addapi-keyand value to the header if they are missing.

2 Use CURL to Call API

In this post, the client app’s interaction with OpenAI looks like this:

- The client app calls APIM using APIM’s subscription key via the Ocp-Apim-Subscription-Key header

- APIM validates the subscription key before passing the request to Azure OpenAI service

- When passing the request, the APIM policy adds the api-key header with Azure OpenAI service’s API key via the backend

- Finally, Azure OpenAI service validates its API key and processes the incoming payload
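The flow above can be sketched as a small in-memory simulation. This is only an illustration of the two-stage key check, not how APIM is implemented; the function names and key values below are hypothetical.

```python
# Hypothetical keys, for illustration only.
APIM_SUBSCRIPTION_KEYS = {"apim-sub-key-123"}
AZURE_OPENAI_API_KEY = "aoai-key-456"


def azure_openai_backend(headers: dict, payload: dict) -> tuple:
    """Stand-in for Azure OpenAI: validates api-key, then processes the payload."""
    if headers.get("api-key") != AZURE_OPENAI_API_KEY:
        return 401, "invalid api-key"
    return 200, f"processed {len(payload.get('messages', []))} message(s)"


def apim_gateway(headers: dict, payload: dict) -> tuple:
    """Stand-in for APIM: validates the subscription key, then forwards."""
    if headers.get("Ocp-Apim-Subscription-Key") not in APIM_SUBSCRIPTION_KEYS:
        return 401, "invalid subscription key"
    # The backend configuration injects the Azure OpenAI key,
    # so the client never needs to know it.
    forwarded = {**headers, "api-key": AZURE_OPENAI_API_KEY}
    return azure_openai_backend(forwarded, payload)


payload = {"messages": [{"role": "user", "content": "Where is the treasure island?"}]}
ok = apim_gateway({"Ocp-Apim-Subscription-Key": "apim-sub-key-123"}, payload)
denied = apim_gateway({"Ocp-Apim-Subscription-Key": "wrong"}, payload)
print(ok)
print(denied)
```

The point of the sketch is that the client only ever holds the APIM subscription key, while the Azure OpenAI key stays on the gateway side.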

When interacting with Azure OpenAI service directly, your requests will generally include:

- Azure OpenAI Endpoint: Your Azure OpenAI endpoint is in this format: https://<azure-openai-name>.openai.azure.com/openai/deployments/<deployment-id>/chat/completions?api-version=<api-version>. For example, https://blog-openai.openai.azure.com/openai/deployments/gpt-4o/chat/completions?api-version=2024-08-01-preview.

- Azure OpenAI API Key: Your Azure OpenAI API key for authentication. It is sent in the api-key header.

When interacting with Azure OpenAI service behind APIM, your requests will generally include:

- Azure APIM Endpoint: Your Azure APIM endpoint for the Azure OpenAI API is in this format: https://<apim-instance>.azure-api.net/<api-name>. For example, https://blog-apim-ai.azure-api.net/blogapi

- Azure APIM Subscription Key: Your Azure APIM subscription key for the Azure OpenAI API. It is sent in the Ocp-Apim-Subscription-Key header.

- Azure OpenAI Endpoint: Your Azure OpenAI endpoint. This is configured in the APIM backend.

- Azure OpenAI API Key: Your Azure OpenAI API key. This is configured in the APIM backend and sent in the api-key header.
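To make the two URL shapes concrete, here is a minimal sketch that builds the chat completions URL for each case. The helper names are hypothetical; the resource, APIM instance, and API names are the example values used in this post.

```python
from urllib.parse import urlencode


def direct_chat_url(resource: str, deployment: str, api_version: str) -> str:
    """Chat completions URL when calling Azure OpenAI directly."""
    base = f"https://{resource}.openai.azure.com"
    path = f"/openai/deployments/{deployment}/chat/completions"
    return f"{base}{path}?{urlencode({'api-version': api_version})}"


def apim_chat_url(apim_instance: str, api_name: str, deployment: str, api_version: str) -> str:
    """Chat completions URL when the same API is exposed through APIM."""
    base = f"https://{apim_instance}.azure-api.net/{api_name}"
    path = f"/openai/deployments/{deployment}/chat/completions"
    return f"{base}{path}?{urlencode({'api-version': api_version})}"


print(direct_chat_url("blog-openai", "gpt-4o", "2024-08-01-preview"))
print(apim_chat_url("blog-apim-ai", "blogapi", "gpt-4o", "2024-04-01-preview"))
```

Only the host and the API name prefix change; the /openai/deployments/... path is the same in both cases.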

2.1 CURL to call Azure OpenAI

The RESTful API call to Azure OpenAI service directly is simple: we only need the api-key header and a request body. Be aware that the URL and api-key here are the Azure OpenAI endpoint and key (not APIM’s).

curl -i --location 'https://blog-openai.openai.azure.com/openai/deployments/gpt-4o/chat/completions?api-version=2024-08-01-preview' \
  --header 'Content-Type: application/json' \
  --header 'api-key: Frq6rduglBTVXNxZ8wtoFbtAfxxxxx' \
  --data '{
    "messages": [
      {
        "role": "system",
        "content": [
          {
            "type": "text",
            "text": "You are a helpful assistant who talks like a pirate."
          }
        ]
      },
      {
        "role": "user",
        "content": [
          {
            "type": "text",
            "text": "Good day, who am I talking to?"
          }
        ]
      },
      {
        "role": "assistant",
        "content": [
          {
            "type": "text",
            "text": "Ahoy there, matey! What be bringin ye to these waters today? "
          }
        ]
      },
      {
        "role": "user",
        "content": [
          {
            "type": "text",
            "text": "Where is the treasure island?"
          }
        ]
      }
    ],
    "temperature": 0.7,
    "top_p": 0.95,
    "max_tokens": 800
  }'

The response looks like below. If you inspect the response headers, it is interesting to see an apim-request-id header. This suggests that the Azure OpenAI service itself sits behind a global APIM (not your APIM).

Arrr, ye be searchin' fer the legendary Treasure Island, eh? Well, it be said that the island lies hidden in the vast expanses of the Seven Seas, shrouded in mystery and guarded by fearsome creatures. Some say it’s marked on an ancient map, torn in two, with one half held by Captain Flint’s ghost and the other by a one-legged man. Keep yer compass steady and yer wits about ye, and maybe, just maybe, ye’ll find that fabled isle. Fair winds and following seas, matey!

HTTP/2 200

content-length: 1324

content-type: application/json

apim-request-id: bea1796b-ad97-45dc-a586-b31cd1e94b1b

strict-transport-security: max-age=31536000; includeSubDomains; preload

x-ratelimit-remaining-requests: 9

x-accel-buffering: no

x-ms-rai-invoked: true

x-request-id: 3d9919b0-bb1b-4ca4-a3d7-3d67d2ae1264

x-content-type-options: nosniff

azureml-model-session: d112-20240926064409

x-ms-region: Australia East

x-envoy-upstream-service-time: 1136

x-ms-client-request-id: bea1796b-ad97-45dc-a586-b31cd1e94b1b

x-ratelimit-remaining-tokens: 9154

date: Sat, 09 Nov 2024 03:27:31 GMT

{"choices":[{"content_filter_results":{"hate":{"filtered":false,"severity":"safe"},"protected_material_code":{"filtered":false,"detected":false},"protected_material_text":{"filtered":false,"detected":false},"self_harm":{"filtered":false,"severity":"safe"},"sexual":{"filtered":false,"severity":"safe"},"violence":{"filtered":false,"severity":"safe"}},"finish_reason":"stop","index":0,"logprobs":null,"message":{"content":"Arrr, ye be seekin' Treasure Island, do ye? Legend has it, the fabled isle be hidden in the vast seas, marked only on the rarest of maps. Some say it lies in the Caribbean, others whisper it be in the South Seas. Best be keepin' a sharp eye out fer clues and followin' the stars, matey! May fair winds guide yer sails to the riches ye seek!","role":"assistant"}}],"created":1731122851,"id":"chatcmpl-ARWQFIfWeKIJNuaCIX4wurpG8AHDn","model":"gpt-4o-2024-05-13","object":"chat.completion","prompt_filter_results":[{"prompt_index":0,"content_filter_results":{"hate":{"filtered":false,"severity":"safe"},"jailbreak":{"filtered":false,"detected":false},"self_harm":{"filtered":false,"severity":"safe"},"sexual":{"filtered":false,"severity":"safe"},"violence":{"filtered":false,"severity":"safe"}}}],"system_fingerprint":"fp_67802d9a6d","usage":{"completion_tokens":88,"prompt_tokens":63,"total_tokens":151}}
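As a rough sketch of how a client might read this response, the snippet below pulls out the assistant reply and the token usage. The JSON here is a shortened copy of the body above, with most fields omitted and the message content cut to its first sentence.

```python
import json

# Shortened copy of the response body shown above (most fields omitted).
body = """
{
  "choices": [
    {
      "finish_reason": "stop",
      "index": 0,
      "message": {"content": "Arrr, ye be seekin' Treasure Island, do ye?", "role": "assistant"}
    }
  ],
  "model": "gpt-4o-2024-05-13",
  "usage": {"completion_tokens": 88, "prompt_tokens": 63, "total_tokens": 151}
}
"""

data = json.loads(body)
reply = data["choices"][0]["message"]["content"]
usage = data["usage"]

print(reply)
print(f"tokens: {usage['prompt_tokens']} prompt + {usage['completion_tokens']} completion = {usage['total_tokens']}")
```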

2.2 CURL to call Azure OpenAI Behind APIM

When Azure OpenAI is sitting behind APIM, the HTTP request looks very similar. The only differences are:

- The URL is now the Azure APIM URL.

- The subscription key header is Ocp-Apim-Subscription-Key, with an APIM subscription key as its value. APIM sets Azure OpenAI’s api-key in the APIM policy via the backend configuration behind the scenes.

curl -i --location 'https://blog-apim-ai.azure-api.net/blogapi/openai/deployments/gpt-4o/chat/completions?api-version=2024-04-01-preview' \
  --header 'Content-Type: application/json' \
  --header 'Ocp-Apim-Subscription-Key: 23543f01081049e4axxxx' \
  --data '{
    "messages": [
      {
        "role": "system",
        "content": [
          {
            "type": "text",
            "text": "You are a helpful assistant who talks like a pirate."
          }
        ]
      },
      {
        "role": "user",
        "content": [
          {
            "type": "text",
            "text": "Good day, who am I talking to?"
          }
        ]
      },
      {
        "role": "assistant",
        "content": [
          {
            "type": "text",
            "text": "Ahoy there, matey! What be bringin ye to these waters today? "
          }
        ]
      },
      {
        "role": "user",
        "content": [
          {
            "type": "text",
            "text": "Where is the treasure island?"
          }
        ]
      }
    ],
    "temperature": 0.7,
    "top_p": 0.95,
    "max_tokens": 800
  }'

The response looks like below. It is basically the same as calling Azure OpenAI directly. If you inspect the response headers, the main difference is that the protocol is now HTTP/1.1 rather than HTTP/2.

Arrr, the treasure island be a legendary place, often spoken of in tales 'n songs o' the sea. Some say it be hidden in the Caribbean, others claim it lies in the South Seas. Ye best be havin' a map or clues from an old sea dog to find it. Beware, though, for many a pirate has lost his way searchin' for that fabled isle!

HTTP/1.1 200 OK

Content-Length: 1305

Content-Type: application/json

Date: Sat, 09 Nov 2024 03:26:23 GMT

Strict-Transport-Security: max-age=31536000; includeSubDomains; preload

apim-request-id: d0ff2959-70e3-4b55-8bf0-5144bd0aa6b1

x-ratelimit-remaining-requests: 9

x-accel-buffering: no

x-ms-rai-invoked: true

X-Request-ID: b01f01c9-f001-4a33-9aec-347ad5119f6f

X-Content-Type-Options: nosniff

azureml-model-session: d100-20240925160025

x-ms-region: Australia East

x-envoy-upstream-service-time: 1049

x-ms-client-request-id: d0ff2959-70e3-4b55-8bf0-5144bd0aa6b1

x-ratelimit-remaining-tokens: 7462

{"choices":[{"content_filter_results":{"hate":{"filtered":false,"severity":"safe"},"protected_material_code":{"filtered":false,"detected":false},"protected_material_text":{"filtered":false,"detected":false},"self_harm":{"filtered":false,"severity":"safe"},"sexual":{"filtered":false,"severity":"safe"},"violence":{"filtered":false,"severity":"safe"}},"finish_reason":"stop","index":0,"logprobs":null,"message":{"content":"Arrr, the treasure island be a legendary place, often spoken of in tales 'n songs o' the sea. Some say it be hidden in the Caribbean, others claim it lies in the South Seas. Ye best be havin' a map or clues from an old sea dog to find it. Beware, though, for many a pirate has lost his way searchin' for that fabled isle!","role":"assistant"}}],"created":1731122782,"id":"chatcmpl-ARWP8ncGhGUFCdlbRUGv2FtdflSC2","model":"gpt-4o-2024-05-13","object":"chat.completion","prompt_filter_results":[{"prompt_index":0,"content_filter_results":{"hate":{"filtered":false,"severity":"safe"},"jailbreak":{"filtered":false,"detected":false},"self_harm":{"filtered":false,"severity":"safe"},"sexual":{"filtered":false,"severity":"safe"},"violence":{"filtered":false,"severity":"safe"}}}],"system_fingerprint":"fp_67802d9a6d","usage":{"completion_tokens":82,"prompt_tokens":63,"total_tokens":145}}
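Both responses above carry x-ratelimit-remaining-requests and x-ratelimit-remaining-tokens headers. A client could read them to decide when to slow down. The sketch below shows one way to do that; the thresholds and function names are hypothetical, and treating a missing header as 0 is a deliberately conservative assumption.

```python
def remaining_quota(headers: dict) -> dict:
    """Read the x-ratelimit-remaining-* headers, ignoring header-name case."""
    lowered = {name.lower(): value for name, value in headers.items()}
    return {
        # A missing header is treated as 0 (conservative assumption).
        "requests": int(lowered.get("x-ratelimit-remaining-requests", 0)),
        "tokens": int(lowered.get("x-ratelimit-remaining-tokens", 0)),
    }


def should_back_off(headers: dict, min_requests: int = 1, min_tokens: int = 800) -> bool:
    """Hypothetical rule: pause when either remaining budget is below its floor."""
    quota = remaining_quota(headers)
    return quota["requests"] < min_requests or quota["tokens"] < min_tokens


# Header values copied from the APIM response above.
apim_headers = {
    "x-ratelimit-remaining-requests": "9",
    "x-ratelimit-remaining-tokens": "7462",
}
print(remaining_quota(apim_headers))
print(should_back_off(apim_headers))
```

The default token floor of 800 mirrors the max_tokens value used in the CURL requests, so a request is only sent when a full-length reply would still fit in the remaining budget.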

3 Use SDK to call Azure OpenAI Service

Typically, it is preferable to consume Azure OpenAI service using an SDK. Let’s have a look at how we can do that. Please create a .env file to configure the endpoint and API keys for all the code examples below. The .env file sits next to the actual program. Source code for these projects can be downloaded here.

AZURE_OPENAI_ENDPOINT=https://blog-openai.openai.azure.com/

AZURE_OPENAI_API_KEY=Frq6rduglBTVXNxZ8wtoFbtAfxxxxx

AZURE_APIM_API_KEY=23543f01081049e4axxxx

Please replace the above settings with your own details.

- AZURE_OPENAI_API_KEY is under Azure OpenAI -> deployments -> your model -> details -> endpoint -> key

- AZURE_APIM_API_KEY is under APIM -> subscriptions
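Before running the samples, it can help to check that all three settings are actually present. The following is a minimal sketch; the helper name missing_settings is hypothetical, and the example dictionary stands in for the real environment.

```python
REQUIRED_SETTINGS = (
    "AZURE_OPENAI_ENDPOINT",
    "AZURE_OPENAI_API_KEY",
    "AZURE_APIM_API_KEY",
)


def missing_settings(env) -> list:
    """Return the names of required settings that are absent or blank."""
    return [name for name in REQUIRED_SETTINGS if not (env.get(name) or "").strip()]


# Example: the endpoint is set, one key is blank, and one key is missing entirely.
example_env = {
    "AZURE_OPENAI_ENDPOINT": "https://blog-openai.openai.azure.com/",
    "AZURE_OPENAI_API_KEY": "",
}
print(missing_settings(example_env))
```

In a real program you would pass os.environ after calling load_dotenv(), and stop early if the returned list is not empty.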

3.1 Python SDK

We can use the AzureOpenAI client from the openai package in Python. It is pretty straightforward.

3.1.1 Python SDK to call Azure OpenAI

import os

from dotenv import load_dotenv
from openai import AzureOpenAI

# Load the AZURE_* settings from the .env file next to this script
load_dotenv()

client = AzureOpenAI(
    azure_endpoint=os.getenv("AZURE_OPENAI_ENDPOINT"),
    api_key=os.getenv("AZURE_OPENAI_API_KEY"),
    api_version="2024-02-01",
)

response = client.chat.completions.create(
    model="gpt-4o",  # name of the model deployment
    messages=[
        {"role": "system", "content": "You are a helpful assistant who talks like a pirate."},
        {"role": "user", "content": "Good day, who am I talking to?"},
        {"role": "assistant", "content": "Ahoy there, matey! What be bringin ye to these waters today? "},
        {"role": "user", "content": "Where is the treasure island?"},
    ],
)

print(response.choices[0].message.content)

3.1.2 Python SDK to call Azure OpenAI Behind APIM

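To call Azure OpenAI behind APIM, we need to add default_headers to attach the additional APIM subscription key header Ocp-Apim-Subscription-Key. For the request to actually go through APIM, AZURE_OPENAI_ENDPOINT in the .env file should presumably point at the APIM endpoint (for example, https://blog-apim-ai.azure-api.net/blogapi) rather than at the Azure OpenAI endpoint.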

import os

from dotenv import load_dotenv
from openai import AzureOpenAI

# Load the AZURE_* settings from the .env file next to this script
load_dotenv()

client = AzureOpenAI(
    azure_endpoint=os.getenv("AZURE_OPENAI_ENDPOINT"),
    api_key=os.getenv("AZURE_OPENAI_API_KEY"),
    api_version="2024-02-01",
    # Extra header that APIM uses to validate the subscription
    default_headers={"Ocp-Apim-Subscription-Key": os.getenv("AZURE_APIM_API_KEY")},
)

response = client.chat.completions.create(
    model="gpt-4o",  # name of the model deployment
    messages=[
        {"role": "system", "content": "You are a helpful assistant who talks like a pirate."},
        {"role": "user", "content": "Good day, who am I talking to?"},
        {"role": "assistant", "content": "Ahoy there, matey! What be bringin ye to these waters today? "},
        {"role": "user", "content": "Where is the treasure island?"},
    ],
)

print(response.choices[0].message.content)
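Since the two Python samples differ only in the endpoint and the extra header, one option is to build the client arguments in a single place. The sketch below is an assumption-laden illustration: the helper name and the AZURE_APIM_ENDPOINT setting are hypothetical and not part of the .env file shown earlier.

```python
def azure_openai_client_kwargs(env: dict, use_apim: bool, api_version: str = "2024-02-01") -> dict:
    """Build keyword arguments for openai.AzureOpenAI, for direct or APIM access."""
    kwargs = {
        "api_key": env["AZURE_OPENAI_API_KEY"],
        "api_version": api_version,
    }
    if use_apim:
        # AZURE_APIM_ENDPOINT is a hypothetical setting,
        # e.g. https://blog-apim-ai.azure-api.net/blogapi
        kwargs["azure_endpoint"] = env["AZURE_APIM_ENDPOINT"]
        kwargs["default_headers"] = {"Ocp-Apim-Subscription-Key": env["AZURE_APIM_API_KEY"]}
    else:
        kwargs["azure_endpoint"] = env["AZURE_OPENAI_ENDPOINT"]
    return kwargs


env = {
    "AZURE_OPENAI_ENDPOINT": "https://blog-openai.openai.azure.com/",
    "AZURE_OPENAI_API_KEY": "Frq6rduglBTVXNxZ8wtoFbtAfxxxxx",
    "AZURE_APIM_ENDPOINT": "https://blog-apim-ai.azure-api.net/blogapi",
    "AZURE_APIM_API_KEY": "23543f01081049e4axxxx",
}
print(azure_openai_client_kwargs(env, use_apim=False)["azure_endpoint"])
print(azure_openai_client_kwargs(env, use_apim=True)["azure_endpoint"])
```

The result can then be passed to the SDK as `AzureOpenAI(**azure_openai_client_kwargs(os.environ, use_apim=True))`.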

3.2 Node.js SDK

Similarly, we can use the openai package to make API requests in Node.js:

3.2.1 Node.js SDK to call Azure OpenAI

require('dotenv').config();
const { AzureOpenAI } = require("openai");

const endpoint = process.env["AZURE_OPENAI_ENDPOINT"];
const apiKey = process.env["AZURE_OPENAI_API_KEY"];
const apiVersion = "2024-04-01-preview";
const deployment = "gpt-4o";

async function main() {
  const client = new AzureOpenAI({ endpoint, apiKey, apiVersion, deployment });

  const result = await client.chat.completions.create({
    messages: [
      { role: "system", content: "You are a helpful assistant who talks like a pirate." },
      { role: "user", content: "Good day, who am I talking to?" },
      { role: "assistant", content: "Ahoy there, matey! What be bringin ye to these waters today? " },
      { role: "user", content: "Where is the treasure island?" },
    ],
    // The deployment is already set on the client, so model is left empty
    model: "",
  });

  for (const choice of result.choices) {
    console.log(choice.message);
  }
}

main().catch((err) => {
  console.error("The sample encountered an error:", err);
});

module.exports = { main };

3.2.2 Node.js SDK to call Azure OpenAI Behind APIM

To call Azure OpenAI behind APIM, we need to add defaultHeaders to attach the additional APIM subscription key header Ocp-Apim-Subscription-Key, in a similar way.

require("dotenv").config();
const { AzureOpenAI } = require("openai");

const endpoint = process.env["AZURE_OPENAI_ENDPOINT"];
const apiKey = process.env["AZURE_OPENAI_API_KEY"];
const subscriptionKey = process.env["AZURE_APIM_API_KEY"];
const apiVersion = "2024-04-01-preview";
const deployment = "gpt-4o";

async function main() {
  const client = new AzureOpenAI({
    endpoint,
    apiKey,
    apiVersion,
    deployment,
    // Extra header that APIM uses to validate the subscription
    defaultHeaders: { "Ocp-Apim-Subscription-Key": subscriptionKey },
  });

  const result = await client.chat.completions.create({
    messages: [
      { role: "system", content: "You are a helpful assistant who talks like a pirate." },
      { role: "user", content: "Good day, who am I talking to?" },
      { role: "assistant", content: "Ahoy there, matey! What be bringin ye to these waters today? " },
      { role: "user", content: "Where is the treasure island?" },
    ],
    // The deployment is already set on the client, so model is left empty
    model: "",
  });

  for (const choice of result.choices) {
    console.log(choice.message);
  }
}

main().catch((err) => {
  console.error("The sample encountered an error:", err);
});

module.exports = { main };

3.3 C# SDK

We can use the Azure.AI.OpenAI library together with the OpenAI.Chat namespace in C#.

3.3.1 C# SDK to call Azure OpenAI

using static System.Environment;
using System.ClientModel;
using Azure.AI.OpenAI;
using OpenAI.Chat;
using DotNetEnv;

// Load the AZURE_* settings from the .env file
Env.Load();

string endpoint = GetEnvironmentVariable("AZURE_OPENAI_ENDPOINT");
string key = GetEnvironmentVariable("AZURE_OPENAI_API_KEY");

ApiKeyCredential apiKeyCredential = new ApiKeyCredential(key);

AzureOpenAIClient azureClient = new(
    new Uri(endpoint),
    apiKeyCredential);

ChatClient chatClient = azureClient.GetChatClient("gpt-4o");

ChatCompletion completion = chatClient.CompleteChat(
    new ChatMessage[]
    {
        new SystemChatMessage("You are a helpful assistant that talks like a pirate."),
        new UserChatMessage("Good day, who am I talking to?"),
        new AssistantChatMessage("Ahoy there, matey! What be bringin ye to these waters today? "),
        new UserChatMessage("Where is the treasure island?")
    });

Console.WriteLine($"{completion.Role}: {completion.Content[0].Text}");

3.3.2 C# SDK to call Azure OpenAI Behind APIM

It takes a little more effort to call Azure OpenAI behind APIM in C#, as AzureOpenAIClient does not have an additional parameter to pass headers. We need to use a PipelinePolicy to attach the additional APIM subscription key header Ocp-Apim-Subscription-Key.

using static System.Environment;
using System.ClientModel;
using Azure.AI.OpenAI;
using OpenAI.Chat;
using DotNetEnv;
using System.ClientModel.Primitives;

// Load the AZURE_* settings from the .env file
Env.Load();

string endpoint = GetEnvironmentVariable("AZURE_OPENAI_ENDPOINT");
string key = GetEnvironmentVariable("AZURE_OPENAI_API_KEY");
string subscriptionKey = GetEnvironmentVariable("AZURE_APIM_API_KEY");

ApiKeyCredential apiKeyCredential = new ApiKeyCredential(key);
AzureOpenAIClientOptions clientOptions = new AzureOpenAIClientOptions();

// Policy that adds the APIM subscription key header to every request
PipelinePolicy customHeaders = new GenericActionPipelinePolicy(requestAction: request =>
{
    request.Headers.Set("Ocp-Apim-Subscription-Key", subscriptionKey);
});
clientOptions.AddPolicy(customHeaders, PipelinePosition.PerCall);

AzureOpenAIClient azureClient = new(
    new Uri(endpoint),
    apiKeyCredential,
    clientOptions);

ChatClient chatClient = azureClient.GetChatClient("gpt-4o");

try
{
    ChatCompletion completion = chatClient.CompleteChat(
        new ChatMessage[]
        {
            new SystemChatMessage("You are a helpful assistant that talks like a pirate."),
            new UserChatMessage("Good day, who am I talking to?"),
            new AssistantChatMessage("Ahoy there, matey! What be bringin ye to these waters today? "),
            new UserChatMessage("Where is the treasure island?")
        });

    Console.WriteLine(completion);
    Console.WriteLine($"{completion.Role}: {completion.Content[0].Text}");
}
catch (Exception ex)
{
    Console.WriteLine($"An error occurred: {ex.Message}");
}

// Generic policy that runs an optional action on the request and on the response
internal class GenericActionPipelinePolicy : PipelinePolicy
{
    private Action<PipelineRequest> _requestAction;
    private Action<PipelineResponse> _responseAction;

    public GenericActionPipelinePolicy(Action<PipelineRequest> requestAction = null, Action<PipelineResponse> responseAction = null)
    {
        _requestAction = requestAction;
        _responseAction = responseAction;
    }

    public override void Process(PipelineMessage message, IReadOnlyList<PipelinePolicy> pipeline, int currentIndex)
    {
        _requestAction?.Invoke(message.Request);
        ProcessNext(message, pipeline, currentIndex);
        _responseAction?.Invoke(message.Response);
    }

    public override async ValueTask ProcessAsync(PipelineMessage message, IReadOnlyList<PipelinePolicy> pipeline, int currentIndex)
    {
        _requestAction?.Invoke(message.Request);
        await ProcessNextAsync(message, pipeline, currentIndex).ConfigureAwait(false);
        _responseAction?.Invoke(message.Response);
    }
}
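For readers less familiar with the pipeline policy idea used in the C# sample, the pattern can be sketched in a few lines of Python: each policy receives the request, may modify it, and then hands it to the next step. This is only an illustration of the pattern, not the System.ClientModel API; all names below are hypothetical, and the transport simply echoes the request instead of sending it.

```python
from typing import Callable, Dict, List

Request = Dict[str, Dict[str, str]]  # shape: {"headers": {...}}
Handler = Callable[[Request], Request]
Policy = Callable[[Request, Handler], Request]


def header_policy(name: str, value: str) -> Policy:
    """Create a policy that sets one header, then calls the next handler."""
    def policy(request: Request, next_handler: Handler) -> Request:
        request["headers"][name] = value
        return next_handler(request)
    return policy


def run_pipeline(policies: List[Policy], request: Request, transport: Handler) -> Request:
    """Run the policies in order; the transport is the final handler."""
    def call(index: int, req: Request) -> Request:
        if index == len(policies):
            return transport(req)
        return policies[index](req, lambda r: call(index + 1, r))
    return call(0, request)


# The transport here echoes the request back instead of making a network call.
sent = run_pipeline(
    [header_policy("Ocp-Apim-Subscription-Key", "23543f01081049e4axxxx")],
    {"headers": {"api-key": "Frq6rduglBTVXNxZ8wtoFbtAfxxxxx"}},
    transport=lambda req: req,
)
print(sent["headers"])
```

In the C# sample, GenericActionPipelinePolicy plays the role of header_policy, and ProcessNext corresponds to calling the next handler.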

4 Summary

By integrating Azure OpenAI Service behind your own Azure API Management, you not only make the API accessible but also gain essential management capabilities like security, logging, and usage throttling. This setup enables a controlled, secure, and scalable way to harness the power of Azure OpenAI within your applications across different platforms and languages.

The examples provided demonstrate how to make a RESTful API call to the OpenAI service through APIM using CURL and the different SDKs. Download source code (Python, Node.js and C#)